Statistical Analysis in Research: Meaning, Methods and Types

The scientific method is an empirical approach to acquiring new knowledge through skeptical observation and analysis that lead to a meaningful interpretation. It is the basis of research and a primary pillar of modern science. Researchers seek to understand the relationships between factors associated with the phenomena of interest. In some cases, research works with very large volumes of data, making it impractical to observe or manipulate each data point. Statistical analysis in research therefore becomes a means of evaluating relationships and interconnections between variables, with tools and analytical techniques for working with large data sets. Researchers can also use statistical power analysis to assess the probability of detecting an effect in such an investigation, which helps them judge how reliable the findings are. Hence, statistical analysis in research eases analytical work by focusing on the quantifiable aspects of phenomena.

What is Statistical Analysis in Research? A Simplified Definition

Statistical analysis uses quantitative data to investigate patterns, relationships, and trends in order to understand real-life and simulated phenomena. The approach is a key analytical tool in various fields, including academia, business, government, and science in general. This definition of statistical analysis in research implies a primary focus on quantitative research. Notably, the investigator targets constructs developed from general concepts, so that researchers can quantify their hypotheses and present their findings as simple statistics.

When a business needs to learn how to improve its product, it collects statistical data about the production line and customer satisfaction. Qualitative data is valuable and often identifies the most common themes in the stakeholders’ responses. Quantitative data, on the other hand, establishes a level of importance by comparing the themes based on how critical they are to the affected persons. For instance, descriptive statistics highlight tendency, frequency, variation, and position information. While the mean shows the average value respondents assign to a certain aspect, the variance indicates how widely the responses are spread around that average. In any case, statistical analysis creates simplified concepts used to understand the phenomenon under investigation. It is also a key component in academia as the primary approach to data representation, especially in research projects, term papers and dissertations.

Most Useful Statistical Analysis Methods in Research

Using statistical analysis methods in research is inevitable, especially in academic assignments, projects, and term papers. It’s advisable to seek assistance from your professor before you start your academic project or write the statistical analysis in a research paper. Consulting an expert when developing a topic for your thesis or short mid-term assignment improves your understanding of research methods and can help you select the most suitable statistical analysis method, which in turn influences the choice of data and type of study.

Descriptive Statistics

Descriptive statistics is a statistical method that summarizes quantitative figures to convey critical details about the sample and population. A descriptive statistic is a figure that quantifies a specific aspect of the data. For instance, instead of analyzing the behavior of a thousand students one by one, research can identify the most common actions among them. By doing this, the researcher applies statistical analysis, particularly descriptive statistics.

- Measures of central tendency. The mean, median, and mode are averages that each identify a typical or central data point. They assess the center of the distribution, hence the name, and describe the data in relation to that center.

- Measures of frequency. These statistics document the number of times an event happens. They include frequency, count, ratios, rates, and proportions. Measures of frequency can also show how often a score occurs.

- Measures of dispersion/variation. These descriptive statistics assess how far apart the data points lie. The objective is to view the spread or disparity between the specific inputs. Measures of variation include the standard deviation, variance, and range. They indicate how the spread may affect other statistics, such as the mean.

- Measures of position. These statistics describe where a score stands relative to the other scores. Percentiles, quartiles, and ranks are common examples. They are often useful when comparing the data to normalized information.
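As a rough illustration of the four families of measures above, the following Python sketch computes a few statistics from each family using only the standard library. The `scores` list is made-up data for illustration, not taken from any study.

```python
import statistics
from collections import Counter

# Hypothetical exam scores (illustrative data only)
scores = [72, 85, 85, 90, 64, 78, 85, 90, 70, 81]

# Measures of central tendency
mean = statistics.mean(scores)      # 80
median = statistics.median(scores)  # 83.0
mode = statistics.mode(scores)      # 85

# Measures of frequency: how often each score occurs
counts = Counter(scores)

# Measures of dispersion (population formulas, dividing by n)
value_range = max(scores) - min(scores)
variance = statistics.pvariance(scores)
std_dev = statistics.pstdev(scores)

# Measures of position: the three quartile cut points
q1, q2, q3 = statistics.quantiles(scores, n=4)

print(mean, median, mode, counts[85], value_range, variance, std_dev, q1, q2, q3)
```

Note that `statistics.quantiles` supports more than one interpolation method, so quartiles reported by other tools may differ slightly.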

Inferential Statistics

Inferential statistics is critical to statistical analysis in quantitative research. This approach uses statistical tests to draw conclusions about the population from a sample. Examples of inferential tests include t-tests, F-tests, ANOVA, the Mann-Whitney U test, and the Wilcoxon W test, whose results are commonly reported with a p-value.

Common Statistical Analysis in Research Types

Although inferential and descriptive statistics can be classified as types of statistical analysis in research, they are mostly considered analytical methods. Types of research are distinguishable by the differences in the methodology employed in analyzing, assembling, classifying, manipulating, and interpreting data. The categories may also depend on the type of data used.

Predictive Analysis

Predictive research analyzes past and present data to assess trends and predict future events. An excellent example of predictive analysis is a market survey that seeks to understand customers’ spending habits to weigh the possibility of a repeat or future purchase. Such studies assess the likelihood of an action based on trends.

Prescriptive Analysis

On the other hand, a prescriptive analysis targets likely courses of action. It’s decision-making research designed to identify optimal solutions to a problem. Its primary objective is to test or assess alternative measures.

Causal Analysis

Causal research investigates the explanation behind the events. It explores the relationship between factors for causation. Thus, researchers use causal analyses to analyze root causes, possible problems, and unknown outcomes.

Mechanistic Analysis

This type of research investigates the mechanism of action. Instead of focusing only on the causes or possible outcomes, researchers may seek an understanding of the processes involved. In such cases, they use mechanistic analyses to document, observe, or learn the mechanisms involved.

Exploratory Data Analysis

Similarly, an exploratory study is extensive with a wider scope and minimal limitations. This type of research seeks insight into the topic of interest. An exploratory researcher does not try to generalize or predict relationships. Instead, they look for information about the subject before conducting an in-depth analysis.

The Importance of Statistical Analysis in Research

Statistical analysis provides critical information for decision-making. Decision-makers require past trends and predictive assumptions to inform their actions. In most cases, the raw data is too complex to interpret directly or does not yield meaningful inferences on its own. Statistical tools for analyzing such details help save time and money by extracting only the valuable information for assessment. An excellent example of statistical analysis in research is a randomized controlled trial (RCT) for a Covid-19 vaccine. Published trial reports show how much such analyses matter to stakeholders. A vaccine RCT assesses the effectiveness, side effects, duration of protection, and other benefits. Hence, statistical analysis in research is a helpful tool for understanding data.

Copyright © 2024 Statistics and Data

What Is Statistical Analysis?

Statistical analysis helps you pull meaningful insights from data. The process involves working with data and deducing numbers to tell quantitative stories.

Statistical analysis is a technique we use to find patterns in data and make inferences about those patterns to describe variability in the results of a data set or an experiment.

In its simplest form, statistical analysis answers questions about:

- Quantification — how big/small/tall/wide is it?

- Variability — growth, increase, decline

- The confidence level of these variabilities

What Are the 2 Types of Statistical Analysis?

- Descriptive Statistics: Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

- Inferential Statistics: Inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

What’s the Purpose of Statistical Analysis?

Using statistical analysis, you can determine trends in the data by calculating your data set’s mean or median. You can also analyze how far the data points vary from the mean to get the standard deviation. Furthermore, to test the validity of your conclusions, you can use hypothesis testing and report a p-value, which indicates how likely the observed variability would be if it had occurred by chance alone.

Statistical Analysis Methods

There are two major types of statistical data analysis: descriptive and inferential.

Descriptive Statistical Analysis

Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

Within the descriptive analysis branch, there are two main types: measures of central tendency (i.e. mean, median and mode) and measures of dispersion or variation (i.e. variance, standard deviation and range).

For example, you can calculate the average exam results in a class using central tendency or, in particular, the mean. In that case, you’d sum all student results and divide by the number of results. You can also measure the data set’s spread by calculating the variance. To calculate the variance, subtract the mean from each exam result, square each difference, add the squared differences together and divide by the number of results. This gives the population variance; when the results are a sample from a larger group, the sum is usually divided by the number of results minus one instead.
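The arithmetic described above can be sketched step by step in Python. The five exam results are invented for illustration, and both the population form (divide by n) and the sample form (divide by n - 1) are shown.

```python
# Hypothetical exam results for five students (illustrative data only)
results = [70, 80, 90, 60, 100]
n = len(results)

# Mean: sum of all results divided by the number of results
mean = sum(results) / n  # 80.0

# Squared difference between each result and the mean
squared_diffs = [(x - mean) ** 2 for x in results]

# Population variance divides by n; sample variance divides by n - 1
population_variance = sum(squared_diffs) / n      # 200.0
sample_variance = sum(squared_diffs) / (n - 1)    # 250.0

print(mean, population_variance, sample_variance)
```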

Inferential Statistics

On the other hand, inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

There are two main types of inferential statistical analysis: hypothesis testing and regression analysis. We use hypothesis testing to test and validate assumptions in order to draw conclusions about a population from the sample data. Popular tests include the Z-test, F-test and ANOVA test, and confidence intervals are often reported alongside them. On the other hand, regression analysis primarily estimates the relationship between a dependent variable and one or more independent variables. There are numerous types of regression analysis but the most popular ones include linear and logistic regression.

Statistical Analysis Steps

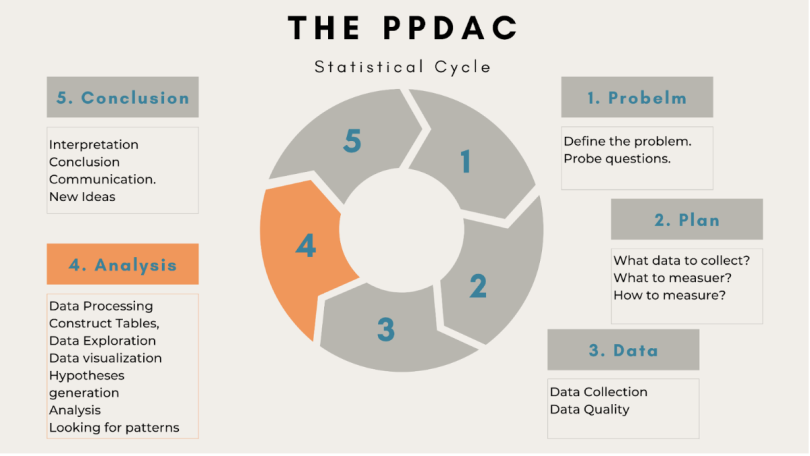

In the era of big data and data science, there is a rising demand for a more problem-driven approach. As a result, we must approach statistical analysis holistically. We may divide the entire process into five different and significant stages by using the well-known PPDAC model of statistics: Problem, Plan, Data, Analysis and Conclusion.

1. Problem

In the first stage, you define the problem you want to tackle and explore questions about the problem.

2. Plan

Next is the planning phase. You can check whether data is available or if you need to collect data for your problem. You also determine what to measure and how to measure it.

3. Data

The third stage involves data collection, understanding the data and checking its quality.

4. Analysis

Statistical data analysis is the fourth stage. Here you process and explore the data with the help of tables, graphs and other data visualizations. You also develop and scrutinize your hypothesis in this stage of analysis.

5. Conclusion

The final step involves interpretations and conclusions from your analysis. It also covers generating new ideas for the next iteration. Thus, statistical analysis is not a one-time event but an iterative process.

Statistical Analysis Uses

Statistical analysis is useful for research and decision making because it allows us to understand the world around us and draw conclusions by testing our assumptions. Statistical analysis is important for various applications, including:

- Statistical quality control and analysis in product development

- Clinical trials

- Customer satisfaction surveys and customer experience research

- Marketing operations management

- Process improvement and optimization

- Training needs

Benefits of Statistical Analysis

Here are some of the reasons why statistical analysis is widespread in many applications and why it’s necessary:

Understand Data

Statistical analysis gives you a better understanding of the data and what they mean. These types of analyses provide information that would otherwise be difficult to obtain by merely looking at the numbers without considering their relationship.

Find Causal Relationships

Statistical analysis can help you investigate causation or establish the precise meaning of an experiment, like when you’re looking for a relationship between two variables.

Make Data-Informed Decisions

Businesses are constantly looking to find ways to improve their services and products. Statistical analysis allows you to make data-informed decisions about your business or future actions by helping you identify trends in your data, whether positive or negative.

Determine Probability

Statistical analysis is an approach to understanding how the probability of certain events affects the outcome of an experiment. It helps scientists and engineers decide how much confidence they can have in the results of their research, how to interpret their data and what questions they can feasibly answer.

What Are the Risks of Statistical Analysis?

Statistical analysis can be valuable and effective, but it’s an imperfect approach. Even if the analyst or researcher performs a thorough statistical analysis, there may still be known or unknown problems that can affect the results. Statistical analysis is also not a one-size-fits-all process. If you want to get good results, you need to know what you’re doing, and it can take a lot of time to figure out which type of statistical analysis will work best for your situation.

Remember, too, that conclusions drawn from statistical analysis are not guaranteed to be correct. This can be dangerous when making business decisions. In marketing, for example, we may come to the wrong conclusion about a product. The conclusions we draw from statistical data analysis are often approximate because testing for every factor affecting an observation is impossible.

What Is Statistical Analysis: Types, Methods, Steps & Examples

Statistical analysis is the process of analyzing data in an effort to recognize patterns, relationships, and trends. It involves collecting, arranging and interpreting numerical data and using statistical techniques to draw conclusions.

Statistical analysis in research is a powerful tool used in various fields to make sense of quantitative data. Numbers speak for themselves and help you make assumptions on what may or may not happen if you take a certain course of action.

For example, let's say that you run an ecommerce business that sells coffee. By analyzing the amount of sales and the quantity of coffee produced, you can guess how much more coffee you should manufacture in order to increase sales.

In this blog, we will explore the basics of statistical analysis, including its types, methods, and steps on how to analyze statistical data. We will also provide examples to help you understand how statistical analysis methods are applied in different contexts.

What Is Statistical Analysis: Definition

Statistical analysis is a set of techniques used to analyze data and draw inferences about the population being studied. It involves organizing data, summarizing key patterns, and calculating the probability that observations could have occurred randomly. Statistics help to test hypotheses and determine the link between independent and dependent variables.

It is widely used to optimize processes, products, and services in various fields, including:

- Social sciences, etc.

The ultimate goal of statistical analysis is to extract meaningful insights from data and make predictions about causal relationships. It can also allow researchers to make generalizations about entire populations.

Types of Statistical Analysis

In general, there are 7 different types of statistical analysis, with descriptive, inferential and predictive ones being the most commonly used.

- Descriptive analysis: summarizes data in tables, charts, or graphs to help you find patterns. Includes calculating averages, percentages, mean, median and standard deviation.

- Inferential analysis: draws inferences from a sample and estimates characteristics of a population, generalizing insights from a smaller group to a larger one. Includes hypothesis testing and confidence intervals.

- Predictive analysis: uses data to foresee future trends and patterns. Relies on regression analysis and machine learning techniques.

- Prescriptive analysis: uses data to make informed decisions and suggest actions. Comprises optimization models and network analysis.

- Exploratory analysis: investigates data and discovers relationships between variables. Draws on cluster analysis, principal component analysis, and factor analysis.

- Causal analysis: examines the effect of one or more independent variables on a dependent variable. Involves experiments, surveys, and interviews.

- Mechanistic analysis: studies how different variables interact and affect each other. Includes mathematical models and simulations.

What Are Statistics Used for?

People apply statistics for a variety of purposes across numerous fields, including research, business and even everyday life. Researchers most frequently opt for statistical methods in research in such cases:

- To scrutinize a dataset in experimental and non-experimental research designs and describe the core features

- To test the validity of a claim and determine whether occurring outcomes are due to an actual effect

- To model a causal connection between variables and foresee potential links

- To monitor and improve the quality of products or services by spotting trends

- To assess and manage potential risks.

As you can see, we can benefit from statistical analysis tools in almost any area of life to interpret our surroundings and observe tendencies. Any assumptions that we make after studying a sample can either make or break our research efforts, and a meticulous statistical analysis helps ensure that you are making the best-supported guess.

Statistical Analysis Methods

There is no shortage of statistical methods and techniques that can be exercised to make assumptions. When done right, these methods will streamline your research and enlighten you with meaningful insights into the correlation between various factors or processes.

As a student or researcher, you will most likely deal with the following statistical methods of data analysis in your studies:

- Mean: average value of a dataset.

- Standard deviation: measure of variability in data.

- Regression: predicting one variable based on another.

- Hypothesis testing: statistical testing of a hypothesis.

- Sample size: number of individuals to be observed.

Let's discuss each of these statistical analysis techniques in more detail.

Mean

Imagine that you need to figure out the typical value in a set of numbers. The mean is a common statistical measure that gives the average value.

The mean is calculated by summing all data points and then dividing by the number of data points. It's a useful starting point for exploratory analysis because it summarizes the center of the data in a single number.

You want to calculate the average age of 500 people working in your enterprise. You would add up the ages of all 500 people and divide by 500 to calculate the mean age: (25+31+27+28+34...)/500=27.

Standard Deviation

Sometimes, you will need to figure out how your data is distributed. That's where a standard deviation comes in! The standard deviation is a statistical method that gives a clue of how far your data is located from the average value (mean).

A higher standard deviation indicates that the data is more spread out from the mean, while a lower standard deviation indicates that the data is more tightly clustered around the mean.

Let's take the same example as above and calculate how much the ages fluctuate around the average value, which is 27. You would subtract the mean from each age and then square the result. Then you add up all the squared results and divide the sum by 500 (the number of individuals), which gives the variance. Taking the square root of the variance gives the standard deviation of your data set.
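To make the calculation concrete, here is a minimal Python sketch. It uses only five ages for brevity (a small illustrative list, not the full 500-person data set, so its mean differs from 27) and checks the manual result against the standard library's `statistics.pstdev`.

```python
import math
import statistics

# Five illustrative ages; a real data set would contain every employee
ages = [25, 31, 27, 28, 34]
n = len(ages)

mean_age = sum(ages) / n  # 29.0

# Subtract the mean from each age and square the difference
squared_diffs = [(age - mean_age) ** 2 for age in ages]

# Variance: average of the squared differences (population form)
variance = sum(squared_diffs) / n  # 10.0

# Standard deviation: square root of the variance
std_dev = math.sqrt(variance)

print(mean_age, variance, round(std_dev, 4))
```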

Regression

Regression is one of the most powerful types of statistical methods, as it allows you to make predictions based on existing data. It models the link between two or more variables and allows you to estimate unknown values. By using regression, you can measure how one factor is associated with another and forecast future values of the dependent variable.

You want to predict the price of a house based on its size. You would collect details on the size and price of several houses in a given district. You would then use regression analysis to determine whether size is associated with price. After finding a positive relationship between the variables, you could then fit an equation that allows you to predict the price of a house based on its size.
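The house-price example can be sketched as a simple linear regression fitted by ordinary least squares. The sizes, prices, and units below are hypothetical values chosen for illustration, and the helper name `predict_price` is an assumption of this sketch.

```python
# Hypothetical data: house size (square meters) and price (thousands)
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 260, 310, 360]

n = len(sizes)
mean_x = sum(sizes) / n
mean_y = sum(prices) / n

# Ordinary least squares for one predictor:
# slope = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x) ** 2)
s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes, prices))
s_xx = sum((x - mean_x) ** 2 for x in sizes)
slope = s_xy / s_xx
intercept = mean_y - slope * mean_x


def predict_price(size):
    """Estimate the price for a given size using the fitted line."""
    return intercept + slope * size


print(slope, intercept, predict_price(100))
```

A positive `slope` here corresponds to the positive relationship described in the example. The fitted line only describes the data it was built from, so predictions far outside the observed sizes should be treated with caution.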

Hypothesis Testing

Hypothesis testing is another statistical analysis tool which allows you to assess whether the data support your assumptions. A test does not prove a hypothesis; it tells you whether there is enough evidence to reject the null hypothesis.

You are testing a new drug and would like to know if it has any effect on lowering cholesterol levels. You can use hypothesis testing to compare the results of your treatment group and control group. A statistically significant difference between the results would suggest that the drug can decrease cholesterol levels.
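One way to sketch the drug example without external libraries is an exact permutation test, used here in place of the more common t-test purely for simplicity. The cholesterol readings are invented, and the test is one-sided because the alternative hypothesis is that the drug lowers cholesterol.

```python
from itertools import combinations
from statistics import mean

# Hypothetical cholesterol readings (illustrative data only)
treatment = [180, 175, 190, 170, 185]
control = [200, 210, 195, 205, 215]

# Observed effect: how much lower the treatment mean is than the control mean
observed_diff = mean(control) - mean(treatment)  # 25

pooled = treatment + control
n_treat = len(treatment)

# Under the null hypothesis the group labels are interchangeable, so we
# enumerate every way of assigning n_treat readings to the treatment group
# and count how often the difference is at least as large as the observed one.
as_extreme = 0
total = 0
for idx in combinations(range(len(pooled)), n_treat):
    chosen = set(idx)
    group_a = [pooled[i] for i in chosen]
    group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
    if mean(group_b) - mean(group_a) >= observed_diff:
        as_extreme += 1
    total += 1

p_value = as_extreme / total
print(observed_diff, total, p_value)
```

With a conventional significance level of 0.05, a p-value this small would lead to rejecting the null hypothesis of no effect for this made-up data.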

Sample Size

In order to draw reliable conclusions from your data analysis, you need to have a sample size large enough to provide you with accurate results. The size of the sample can greatly influence the reliability of your analysis, so it's important to decide on the right number of individuals.

You want to conduct a survey about customer satisfaction in your business. The sample size should be large enough to offer you representative results. You would need to question enough clients to obtain reliable information.

These are just a few examples of statistical analysis and its methods. By using them wisely, you will be able to reach well-supported conclusions.

Statistical Analysis Process

Now that you are familiar with the most essential methods and tools for statistical analysis, you are ready to get started with the process itself. Below we will explain how to perform statistical analysis in the right order. Stick to our detailed steps to run a foolproof study like a professional statistical analyst.

1. Prepare Your Hypotheses

Before you start digging into the numbers, it's important to formulate a hypothesis.

Generally, there are two types of hypotheses that you will need to distinguish: a null hypothesis and an alternative hypothesis. The null hypothesis states that there is no effect or no relationship between the variables being studied, while the alternative hypothesis states that an effect or relationship exists.

First, identify a research question or problem that you want to investigate. Then, build 2 opposing statements that outline the relationship between variables in your study.

For example if you want to check how some specific exercise influences a person's resting heart rate, your hypotheses might look like this:

Null hypothesis: The exercise has no effect on resting heart rate.

Alternative hypothesis: The exercise reduces resting heart rate.

2. Collect Data

Your next step in conducting statistical data analysis is to make sure that you are working with the right data. After all, you don't want to realize that the information you obtained doesn't fit your research design.

To choose appropriate data for your study, keep a few key points in mind. First, you'll want to identify a trustworthy data source. This could be a primary source, such as a survey, poll or experiment you've conducted, or a secondary source, such as existing databases, research articles, or other scholarly publications. If you are conducting original research, you will most likely need to organize your own experimental study or survey.

You should also have enough data to work with. Decide on an adequate sample size or a sufficient time period. This will help make your data analysis applicable to broader populations.

As you're gathering data, don't forget to check its format and accessibility. You'll want the data to be in a usable form, so you might need to convert or aggregate it as needed.

Sampling Techniques for Data Analysis

Now, let's ensure that you are acquainted with the sampling methods. In general, they fall into 2 main categories: probability and non-probability sampling.

If you are performing a survey to investigate the shopping behaviors of people living in the USA, you can use simple random sampling. This means that you will randomly select individuals from a larger population.
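Simple random sampling can be sketched with Python's `random` module. The population of 1,000 respondent IDs and the sample size of 50 are arbitrary choices for illustration, and the fixed seed is there only to make the example repeatable.

```python
import random

# Hypothetical sampling frame: one ID per person in the population
population = list(range(1, 1001))

# A seeded generator keeps the example reproducible; real surveys
# would normally not fix the seed
rng = random.Random(42)

# Draw 50 distinct IDs, each person having the same chance of selection
sample = rng.sample(population, k=50)

print(sorted(sample)[:10])
```

Because `sample` draws without replacement, no respondent can be selected twice.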

3. Arrange and Clean Your Data

The information you retrieve from a sample may be inconsistent and contain errors. Before doing further manipulations, you will need to preprocess data. This is a crucial step in the process of statistical analysis as it allows us to prepare information for the next step.

Arrange your data in a logical fashion and see if you can detect any discrepancies. At this stage, you will need to look for potential missing values or duplicate entries. Here are some typical issues researchers deal with when digesting their data for a statistical study:

- Handling missing values Sometimes, certain entries might be absent. To fix this, you can either remove the entries with missing values or fill in the blanks based on already available data.

- Transforming variables In some cases, you might need to change the way a variable is measured or presented to make it more suitable for your data analysis. This can involve adjusting the scale of the variable or making its distribution more "normal."

- Resampling data Resampling is a technique used to alter data organization, like taking a smaller sample from a larger dataset or rearranging data points to create a new sample. This way, you will be able to enhance the accuracy of your analysis or test different scenarios.
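The three preprocessing steps above can be sketched as follows. The raw values are invented, median imputation is just one of several possible ways to fill gaps, and the log transform shown assumes all values are positive.

```python
import math
import random
import statistics

# Hypothetical measurements with two missing entries (None)
raw = [12.0, None, 15.5, 14.0, None, 120.0, 13.5]

# Handling missing values, option 1: drop the incomplete entries
dropped = [x for x in raw if x is not None]

# Handling missing values, option 2: fill gaps with the median of observed values
fill_value = statistics.median(dropped)  # 14.0
imputed = [fill_value if x is None else x for x in raw]

# Transforming variables: a log transform compresses large values
# (valid only because every value here is positive)
log_values = [math.log(x) for x in imputed]

# Resampling: bootstrap the mean by drawing with replacement many times
rng = random.Random(0)  # seeded for repeatability
bootstrap_means = [
    statistics.mean(rng.choices(imputed, k=len(imputed)))
    for _ in range(1000)
]

print(len(dropped), fill_value, round(statistics.mean(bootstrap_means), 2))
```

The spread of `bootstrap_means` gives a rough sense of how much the sample mean could vary from sample to sample.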

Once your data is shovel-ready, you are ready to select statistical tools for data analysis and scrutinize the information.

4. Perform Data Analysis

Finally, we got to the most important stage – conducting data analysis. You will be surprised by the abundance of statistical methods. Your choice should largely depend on the type and scope of your research proposal or project. Keep in mind that there is no one-size-fits-all approach and your preference should be tailored to your particular research objective.

In some cases, descriptive statistics may be sufficient to answer the research question or hypothesis. For example, if you want to describe the characteristics of a population, such as the average income or education level, then descriptive statistics alone may be appropriate.

In other cases, you may need to use both descriptive and inferential statistics. For example, if you want to compare the means of 2 or more groups, such as the average income of men and women, then you would need to develop predictive models using inferential statistics or run hypothesis tests.

We will go through all scenarios so you can pick the right statistical methods for your specific instance.

Summing Up Data With Descriptive Statistics

To perform efficient statistical analysis, you need to see how numbers create a bigger picture. Some patterns aren't apparent from the first glance and may be hidden deep in raw data.

That's why your data should be presented in a clear manner. Descriptive statistics is the best way to handle this task.

Using Graphs

Your departure point is categorizing your information. Divide data into logical groups and think how to further visualize it. There are various graphical methods to uncover patterns:

- Bar charts: present relative frequencies of different groups

- Line charts: demonstrate how different variables change over time

- Scatter plots: show the connection between two variables

- Histograms: reveal the shape of the data distribution

- Pie charts: provide visual representation of relative frequencies

- Box plots: help to identify significant outliers.

Imagine that you are analyzing the relationship between a person's age and their income. You have collected data on the age and income of 50 individuals, and you want to confirm if there is any relationship. You decide to use a scatter plot with age on x-axis and income on y-axis. When you look at the scatter plot, you might notice that there is a general trend of income increasing with age. This might indicate that older individuals tend to have higher incomes. However, there may be some variation in data, with some people having higher or lower incomes than you expected.

Calculating Averages

Based on how your data is distributed, you will need to calculate your averages, otherwise known as measures of central tendency. There are 3 common measures to choose from:

- Mean: useful when data is normally distributed.

- Median: a better measure in data sets with extreme outliers.

- Mode: handy when looking for the most common value in a data set.
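A short Python sketch shows why the choice of average matters. The values are hypothetical; adding a single extreme value shifts the mean sharply while the median and mode stay put.

```python
import statistics

# Hypothetical values, first without and then with one extreme outlier
values = [30, 32, 35, 35, 38, 40]
with_outlier = values + [400]

for label, data in (("original", values), ("with outlier", with_outlier)):
    print(
        label,
        round(statistics.mean(data), 2),
        statistics.median(data),
        statistics.mode(data),
    )
```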

Assessing Variability

In addition to measures of central tendency, statistical analysts often want to assess the spread or variability of their data. There are several measures of variability popular in statistical analysis:

- Range: Difference between the maximum and minimum values.

- Interquartile range (IQR): Difference between the 75th percentile and 25th percentile.

- Standard deviation: Typical distance of values from the mean (the square root of the variance).

- Variance: Average of the squared deviations from the mean.

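While range is the simplest one, it can be heavily influenced by extreme values. The interquartile range is less sensitive to outliers because it ignores the top and bottom quarters of the data. The variance and standard deviation require additional calculations, but they are more informative because they take into account the distance of each data point from the mean.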
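The measures of variability listed above can be sketched with the standard `statistics` module. The data set is hypothetical. Note that `statistics.quantiles` uses one particular quartile convention by default, so other tools may report slightly different quartiles for the same data.

```python
import statistics

# Hypothetical data set with one extreme outlier (90)
values = [4, 5, 5, 6, 7, 8, 90]

# Range: maximum minus minimum, dominated by the outlier
value_range = max(values) - min(values)

# quantiles(n=4) returns the three cut points Q1, Q2 (median), Q3
q1, q2, q3 = statistics.quantiles(values, n=4)
iqr = q3 - q1

# Sample variance and standard deviation (divide by n - 1)
variance = statistics.variance(values)
std_dev = statistics.stdev(values)

print(f"range={value_range}, IQR={iqr}")
print(f"variance={variance:.2f}, standard deviation={std_dev:.2f}")
```

The range is driven almost entirely by the single outlier, while the IQR reflects the spread of the middle half of the data.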

Testing Hypotheses with Inferential Statistics

After conducting descriptive statistics, researchers can use inferential statistics to draw conclusions about a larger population.

One common method of inferential statistics is hypothesis testing. This involves calculating a p value: the probability of observing results at least as extreme as yours if the null hypothesis were true. If that probability falls below the chosen significance level (commonly 0.05), the null hypothesis is rejected in favor of the alternative hypothesis. When testing hypotheses, it is important to pick the appropriate statistical test and consider factors such as sample size, statistical significance, and effect size.

Researchers test whether a new medication is effective at treating a medical condition by randomly assigning patients to a treatment group and a control group. They measure the outcome of interest and use a t-test to compare the two groups. The calculated t-value turns out to be less than the critical value. This indicates that the difference between the treatment and control groups is not statistically significant, so the null hypothesis cannot be rejected. The researchers can conclude that this study found no evidence that the new medication is effective, which is not the same as proving that it has no effect.
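To make the t-test in this example concrete, here is a minimal sketch of a two-sample t statistic using only the Python standard library. It uses Welch's variant of the t-test, which does not assume equal variances. That is a choice made for this illustration, not something the example specifies. The outcome scores and the helper name `welch_t` are hypothetical.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard errors of each group mean (sample variance / n)
    se_a = statistics.variance(sample_a) / n_a
    se_b = statistics.variance(sample_b) / n_b
    diff = statistics.mean(sample_a) - statistics.mean(sample_b)
    t = diff / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical outcome scores for the two groups
treatment = [12.1, 11.4, 13.0, 12.6, 11.9, 12.8]
control = [11.8, 11.1, 12.4, 12.0, 11.5, 12.3]

t_stat, df = welch_t(treatment, control)
print(f"t = {t_stat:.2f}, df = {df:.1f}")
```

The t statistic is then compared with the critical value from a t-distribution table for the computed degrees of freedom and the chosen significance level. If SciPy is available, `scipy.stats.ttest_ind(treatment, control, equal_var=False)` performs the same test and also returns a p value.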

Another method of inferential statistics is confidence intervals, which estimate the range of values that the true population parameter is likely to fall within.
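As a rough illustration, a confidence interval for a population mean can be sketched with the standard library. This version uses the normal approximation, which is an assumption that works best for larger samples. For small samples, an interval based on the t-distribution would be somewhat wider. The measurements and the function name `mean_confidence_interval` are hypothetical.

```python
import math
import statistics


def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    mean = statistics.mean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # Two-sided critical value from the standard normal distribution
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err


# Hypothetical measurements
sample = [98, 102, 101, 97, 105, 99, 100, 103, 96, 104, 101, 98]

low, high = mean_confidence_interval(sample)
print(f"95% CI: ({low:.2f}, {high:.2f})")
```

Raising the confidence level (for example, to 0.99) widens the interval, because a wider range is needed to capture the true parameter more often.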

If certain conditions for variables are satisfied, you can draw statistical inference using regression analysis. This technique helps researchers devise a scheme of how variables are interconnected in a study. There are different types of regression depending on the variables you're working with:

- Linear regression: used for predicting the value of a continuous outcome variable.

- Logistic regression: chosen when the outcome variable is categorical (for example, yes/no).

- Multiple regression: used to determine the relationship between several independent variables and a single outcome variable.
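The simplest of these, linear regression with one predictor, can be sketched in a few lines using the ordinary least squares formulas. The data and the helper name `fit_line` are hypothetical and chosen only for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: one predictor and one continuous outcome
x_values = [1, 2, 3, 4, 5]
y_values = [52, 58, 61, 68, 71]

slope, intercept = fit_line(x_values, y_values)
predicted = intercept + slope * 6  # predicted outcome for x = 6
print(f"slope={slope:.1f}, intercept={intercept:.1f}, prediction={predicted:.1f}")
```

The slope estimates how much the outcome changes, on average, for each one-unit increase in the predictor.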

As you can see, there are various approaches to statistical analysis. Depending on the kind of data you are processing, you have to choose the right type of analysis.

5. Interpret the Outcomes

After conducting the statistical analysis, it is important to interpret the results. This includes determining whether the null hypothesis was rejected. If it was rejected, the data support the alternative hypothesis; if not, the data do not provide enough evidence for it. You should further assess whether the data followed any patterns, and if so, what those patterns mean.

It is also important to consider any errors that could have occurred during the analysis, such as measurement error or sampling bias. These errors can affect your results and can lead to incorrect interpretations if not accounted for.

Make sure you communicate the results effectively to others. This may involve creating reports or giving a presentation to other members of your research team. The choice of format for presenting the results will depend on the intended audience and the goals of your statistical analysis. You may also need to check the guidelines of any specific paper format you are working with. For example, if you are writing in APA style, you might need to learn more about reporting statistics in APA.

Suppose that, after conducting a regression analysis, you find a statistically significant positive relationship between the number of hours spent studying and exam scores. Specifically, for every additional hour of studying, the exam score increases by an average of 5 points (β = 5.0, p < 0.001). Based on these results, you can conclude that the more time students spend studying, the higher their exam scores tend to be. However, other factors could also be influencing the exam scores, such as prior knowledge or natural ability. Therefore, you should account for these potential confounding variables when interpreting the results.

Benefits of Statistical Analysis

Statistics in research is a solid instrument for understanding numerical data in a quantitative study. Here are some of the key benefits of statistical analysis:

- Identifying patterns and relationships

- Testing hypotheses

- Making assumptions and forecasts

- Measuring uncertainty

- Comparing data.

Statistics Drawbacks

Statistical analysis can be powerful and useful, but it also has some limitations. Some of the key cons of statistics include:

- Reliance on data accuracy and quality

- Inability to provide complete explanations for results

- Chance of incorrect interpretation or application of results

- Need for specialized knowledge or software

- Complexity of analysis.

Bottom Line on Statistical Analysis

Statistical analysis is an essential tool for any researcher, scientist, or student who works with quantitative data. However, the accuracy of data is paramount in any statistical analysis: if the data is flawed, the results can be misleading. Therefore, you should know how to apply statistical methods and account for potential errors to obtain dependable results.


FAQ About Statistics

1. What is a statistical method?

A statistical method is a set of techniques used to analyze data and draw conclusions about a population. Statistical methods involve using mathematical formulas, models, or algorithms to summarize data and investigate causal relationships. They are also utilized to estimate population parameters and make predictions.

2. What is the importance of statistical analysis?

Statistical analysis is important because it allows us to make sense of data and draw conclusions that are supported by evidence, rather than relying solely on intuition. It helps us to understand the relationships between variables, test hypotheses and make predictions, which can further drive progress in various fields of study. Additionally, statistical analysis can provide a means of objectively evaluating the effectiveness of interventions, policies, or programs.

3. How can I ensure the validity of my statistical analysis results?

To ensure the validity of statistical analysis results, it's essential to use techniques that are appropriate for your research question and data type. Most statistical methods assume certain conditions about the data. Verify whether the assumptions are met before applying any method. Outliers can also significantly affect the results of statistical analysis. Remove them if they are due to data entry errors, or analyze them separately if they are legitimate data points.

4. What is the difference between statistical analysis and data analysis?

Statistical analysis is a type of data analysis that uses statistical methods, while data analysis is a broader process of examining data using various techniques. Statistical analysis is just one tool used in data analysis.

Joe Eckel is an expert on dissertation writing. He makes sure that each student gets valuable insights on composing A-grade academic writing.


Statistical Analysis: Definition, Types, Importance & More

Updated on November 8, 2024

Statistical analysis is a way of studying data to identify trends, relationships and patterns. It is widely used and trusted because it helps researchers, governments, businesses, and organisations draw sensible conclusions from numbers. To produce dependable recommendations, statistical analysis requires adequate planning: choosing your objectives, formulating your study questions, and selecting a sample and sampling technique.

After collecting data, you can aggregate and categorise it with descriptive statistics, then use inferential statistics to generalise the results from the sample to the entire population. Finally, you analyse and report the results to others. This guide introduces statistical analysis for students and researchers, showing each step through two examples: one examines whether there is a direct correlation between two events, and the other examines the relationship between two factors.

Statistical Analysis Definition

The term statistical analysis refers to the collection and study of data to uncover patterns and trends. Using numbers also helps to remove bias, making data easier to understand. This approach can be applied to help us understand research results, create models, and plan studies or surveys.

Statistical analysis is important in AI and machine learning because these fields rely on large data sets. It lets us organise the data by common patterns and turn it into valuable information. In short, statistical analysis is a way to turn raw, unorganised data into something useful.

Statistical analysis can help businesses and organisations predict future trends using past data. It is the science of working with data to draw conclusions that can inform decisions or help develop a plan.

Steps of Statistical Analysis

Statistical analysis follows a set of steps that transform raw data into meaningful insight. Here's a breakdown of the key steps:

- Define the Data: First, you need to understand what kind of data you have. This includes the data sources, the types of variables, and the formats in which the data was collected, all of which affect the accuracy of the analysis.

- Relate Data to the Population: Compare your sample data to the population it relates to. This step determines whether the sample truly reflects the entire population under study, which is essential for drawing valid conclusions.

- Build a Model: Create a statistical model that describes the relationship between the variables in your data. The model simplifies the data so that the patterns and trends of interest are easier to see.

- Validate the Model: Check how well your model explains the data and how accurate it is. This step often involves hypothesis testing and checking the model assumptions to ensure the results are trustworthy and valid.

- Use Predictive Analytics: Finally, use predictive methods to forecast future outcomes. This step runs scenarios that help organisations and researchers make informed decisions and develop action plans for anticipated trends.


Types of Statistical Analysis

There are several types of statistical analysis, each with a different purpose and approach. Here are the six main types:

Descriptive Analysis

Descriptive analysis collects, organises, and summarises data in an easily understandable way. It presents data in charts, graphs, and tables without drawing conclusions beyond the data itself.

Inferential Analysis

Inferential analysis uses a sample of data to make inferences about a larger population. It identifies relationships between variables and makes it possible to generalise findings from a smaller sample to a larger group.

Predictive Analysis

Predictive analysis uses past data to predict the future. It uses tools like machine learning, data mining, and statistical modelling to spot and predict a trend using historical data.

Prescriptive Analysis

Prescriptive analysis goes even further by recommending actions based on data insights. It supports informed decisions by suggesting the best course of action based on the results of the analysis.

Exploratory Data Analysis

Exploratory data analysis examines potential relationships in data without prior expectations. It looks for unknown patterns and connections, which can reveal insights that are not obvious at first.

Causal Analysis

Causal analysis looks for cause-and-effect relationships. It helps explain why outcomes occur and how one factor affects another, as in a business performance analysis.

Importance of Statistical Analysis

Statistical analysis simplifies complex data so that people in many fields can make informed decisions. Here are some key reasons why statistical analysis is so valuable:

- Simplifies Large Data Sets: Statistical analysis organises huge quantities of data into easily understood and manageable pieces so you can make more sense of an overwhelming amount of information (and derive more meaning from it).

- Supports Research and Experiment Design: Statistical methods are required to plan effective laboratory, field, and survey studies and obtain accurate, reliable results.

- Enables Strategic Planning: Statistical analysis helps researchers and decision-makers develop well-founded plans in any field of study.

- Helps Make Predictions and Generalizations: Researchers use statistical techniques to forecast outcomes and identify trends in diverse fields, from economics to climate science.

- Broad Application Across Fields: Statistical methods are used in almost every field, from physical and biological sciences (like genetics) to business, social sciences, and public health.

- Used by Various Professionals: Statistical analysis is used by business managers, researchers, and manufacturers who base their decisions on the information they obtain to improve their operations.

- Assists Government and Business Administration: Administrators use statistics and data trends to create and modify policies, allocate resources, and judge program effectiveness.

- Supports Political and Social Insights: Politicians and policymakers use statistics to argue points, assuage public fears, and determine policy effectiveness.

Common Software for Statistical Analysis

Statistical analysis often involves working with large datasets that would be too difficult to handle by hand. Luckily, there is software dedicated to making large-scale data analysis efficient and accurate. Learning these tools will improve your skills, make you a more desirable employee, and prepare you for demanding projects.

Statistical software is important in both descriptive and inferential analysis. It can visualise data (create charts and graphs) and perform complex calculations to support sound conclusions. While the software you use may vary by job, here are some of the most commonly used tools in statistical analysis:

- SAS: SAS is widely appreciated for its ability to manage and analyse data, run predictive analytics, and produce detailed statistics.

- R: R is one of the most recommended open-source tools for statistical computing and graphics. It is widely used in research and academia.

- SPSS: SPSS is frequently used in the social sciences as a basic application for analysing surveys and visualising the results.

- Minitab: Minitab is commonly used in quality improvement projects. It offers user-friendly methods of statistical analysis for manufacturing and business.

- Stata: Stata is widely used in economics, biostatistics, and political science. It handles complex data structures and provides a broad range of statistical procedures.

Statistical Analysis Methods

Many methods are used in statistical analysis, and each plays its own role in interpreting data. Here are five of the most commonly used techniques:

1. Mean

A straightforward way to find a dataset’s central tendency is the mean, or average. It is calculated by adding up all the data points and dividing by the number of data points. Although the mean is widely used, it can be misleading if the data has outliers.

2. Standard Deviation

Standard deviation tells you how spread out your data points are from the mean. It helps you judge how variable the data is and whether results can be generalised. The smaller the standard deviation, the closer the data points are to the mean; the larger the standard deviation, the greater the variability.

3. Regression

Regression analysis estimates the relationship between a dependent variable and one or more independent variables. It is commonly used to predict future values from existing trends, although a regression relationship by itself does not prove cause and effect.

4. Hypothesis Testing

Hypothesis testing uses sample data to determine whether a hypothesis or assumption about a population is supported. Researchers start with an initial assumption and use the data to try to refute it, adding rigour to research conclusions.

5. Sample Size Determination

Sampling involves selecting a subset of an entire population to represent it, and sample size determination decides how large that subset should be. It’s especially useful when analysing big data. Research objectives determine which sampling technique is used, such as random, convenience, or snowball sampling.

Statistical Analysis Example

To demonstrate statistical analysis, let’s calculate the standard deviation of a set of test scores.

Suppose we have five test scores: 15, 10, 8, 12, and 5.

- Calculate the Mean:

Mean = (15 + 10 + 8 + 12 + 5) / 5 = 50 / 5 = 10

- Calculate the Average of the Squared Differences:

Average of squared differences = (25 + 0 + 4 + 4 + 25) / 5 = 58 / 5 = 11.6

- Find the Variance:

Variance = 11.6

Dividing by the number of scores (5) gives the population variance. If the five scores are treated as a sample from a larger group, divide by n − 1 = 4 instead, which gives a sample variance of 58 / 4 = 14.5.

- Calculate the Standard Deviation:

Standard Deviation = √11.6 ≈ 3.41

In this example, the standard deviation of 3.41 shows the typical spread of test scores around the mean score of 10. This measure helps in understanding the variability of scores.
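The calculation above can be reproduced with Python's standard `statistics` module. This is a minimal sketch using the five scores from the mean calculation. Note that dividing by n, as done above, corresponds to `pvariance` (population variance), while `variance` divides by n − 1 (sample variance).

```python
import statistics

# The five test scores from the worked example
scores = [15, 10, 8, 12, 5]

mean = statistics.mean(scores)

# Population variance and standard deviation (divide by n)
pop_var = statistics.pvariance(scores)
pop_sd = statistics.pstdev(scores)

# Sample variance and standard deviation (divide by n - 1)
sample_var = statistics.variance(scores)
sample_sd = statistics.stdev(scores)

print(f"mean={mean}")
print(f"population variance={pop_var:.1f}, sd={pop_sd:.2f}")
print(f"sample variance={sample_var:.1f}, sd={sample_sd:.2f}")
```

The population figures match the hand calculation (variance 11.6, standard deviation about 3.41), while the sample figures are slightly larger (variance 14.5, standard deviation about 3.81).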

Career in Statistical Analysis

Many industries offer career options in statistical analysis. A Statistical Analyst typically has a solid grounding in statistics, data analytics, or a related area such as maths or computer science.

Data Analysts collect and work with data to discover the trends and patterns that inform decisions. If you’re aiming for more senior jobs such as Data Scientist, being able to code in languages such as Python or R and knowing data visualisation tools (like Tableau) are essential. As you progress, building predictive models and applying machine learning techniques become core advanced skills.

Senior Data Analyst and Data Scientist roles usually involve leading projects or teams, developing strategy, and carrying out large, complex analyses. As your skills grow, you may choose to specialise in one or two areas, such as financial analytics, health data, or consumer behaviour, each with its own challenges and payoffs.

Whether you are just starting your Data Analyst journey or hope to hold leadership positions in the future, mastering statistical tools and understanding different kinds of data are fundamental to a career in statistical analysis.

Statistical analysis is a powerful tool that gives raw data a meaningful shape, allowing individuals and organisations to make informed decisions. It uses many methods and software packages to discover trends, establish relationships, and provide a basis for trustworthy predictions. Analysing data with accuracy and insight is essential, whether in business, research, social sciences or government. Because we gather and use more data than ever about the world around us, mastering statistical analysis is becoming critical.

