

- Open access

- Published: 25 October 2024

Education in the AI era: a long-term classroom technology based on intelligent robotics

- Francisco Bellas ORCID: orcid.org/0000-0001-6043-1468 1 ,

- Martin Naya-Varela 1 ,

- Alma Mallo 1 &

- Alejandro Paz-Lopez 1

Humanities and Social Sciences Communications volume 11, Article number: 1425 (2024)


- Science, technology and society

Artificial Intelligence (AI) will have a major social impact in the coming years, affecting today’s professions and our daily routines. In the short term, education is one of the most affected areas. The autonomous decision making that AI-based tools can achieve implies that some of the traditional methodologies underpinning students’ learning process must be reviewed. Consequently, the role of teachers in the classroom may change, as they will have to deal with AI tools performing parts of their work, and with students making common use of them. In this scope, the AI in Education (AIEd) community agrees on the key relevance of developing AI literacies to train teachers and students of all educational levels in the fundamentals of this new technological discipline, so they can understand how AI-based tools work and steer the adaptation in an informed way. This implies teaching students the fundamentals of topics like perception, representation, reasoning, learning, and the impact of AI, with the aim of delivering a solid grounding in this area. To support this, formal teaching and learning resources must be developed and tested with students, properly adapted to different educational levels. The main contribution of this proposal lies in the presentation of the Robobo Project, a technological tool based on intelligent robotics that supports such formal AI literacy training for a wide range of ages, from secondary school to higher education. The core of this paper shows, in a simple way, the possibilities the Robobo Project offers to teachers and how it can be adapted to different levels and skills, leading to a long-term educational proposal. Validation results supporting the feasibility of this technology for teaching AI, obtained with students and teachers at different educational levels over a period of six years, are presented and discussed.


Introduction

The Artificial Intelligence in Education (hereafter AIEd) community agrees on the key relevance of developing AI literacy with the aim of training teachers and students of all educational levels in the fundamentals of this discipline, so they can face the coming technological revolution in an informed fashion (Global Education Monitoring Report Team 2023; UNESCO 2023).

Many initiatives focus on the development of such AI literacy, mainly from secondary school to higher education (Ng et al. 2021). In higher education, two main situations can be distinguished (Laupichler et al. 2022; Brew et al. 2023; Hornberger et al. 2023). First, in educational programmes focused on training “AI engineers”, such as computer science and related degrees, AI has been part of the syllabus for years and the core topics are clearly established in classical textbooks (Russell and Norvig 2021). Second, in university programmes in many other areas, such as natural sciences or humanities, there is no proper AI literacy as such.

The most advanced contributions to AI literacy can be found in secondary school and high school (K12 to K16) (Ng et al. 2021). A recent UNESCO report reviewed the main official K12 AI curricula developed by different countries (UNESCO 2022), concluding that all of them are based on similar topics, basically those included in the guidelines established by the USA’s AI4K12 initiative (AI4K12 2023). Based on these guidelines, AI literacy is structured around five big ideas: perception, natural interaction, representation & reasoning, learning, and the impact of AI. These ideas revolve around the “intelligent agent” perspective of AI, understood as computational systems situated in an environment (real or virtual) where they interact and operate autonomously (Russell and Norvig 2021; Dignum et al. 2021). This approach implies training students not in trending topics or specific tools such as generative AI, but in the fundamentals of AI contained in these five ideas, from which all AI technologies arise. Although it was developed within the frame of K12 education, this is the perspective on AI literacy that should be adopted at all levels.

The open question now is how to transfer AI literacies to real educational centres, a key point that still has no answer. Some critical questions emerge when addressing this problem: (1) How can teaching AI fit into current education plans? (2) How can educators approach teaching this new discipline? (3) Which educational resources can be used at different levels?

Policy makers worldwide and global organizations such as UNESCO are actively working on these issues, but the heterogeneity of educational systems and the wide spectrum of target students make it complicated to design a common strategy. In the meantime, researchers and educators in the AIEd field have been very active on the last two questions, developing and testing specific classroom resources for teachers that fit into the five topics presented above. In this realm, machine learning is clearly the topic where the most remarkable contributions have been made, especially for supervised learning (AI at code.org 2023; Machine Learning for Kids 2023; MIT 2024). Other initiatives such as Elements of AI (The University of Helsinki 2024), ISTE (ISTE 2023) or MIT RAISE (Lee et al. 2021) have followed a more general perspective, with resources also covering representation, reasoning, and the impact of AI.

However, in key topics such as perception, natural interaction, or reinforcement learning (a very relevant approach in machine learning), the number of formal contributions is lower. The main reason is the nature of these topics, which are tightly coupled to the agent’s online interaction with its environment. To learn about them properly, students need access to data in real time, often while dealing with uncertainty, which increases the complexity of implementing the teaching resources.

Robots have proven to be a feasible solution to this problem. They clearly embody the concept of an intelligent agent because they are situated, and operate, in the real world. Robots continuously gather data and execute actions conditioned by the uncertainty of the environment. Moreover, human-robot interaction (HRI) is a key field in robotics, so all the topics related to natural interaction also fit naturally. Finally, robotics is a prime example of an AI application field: students clearly perceive the relevance of autonomous robots in their lives and are interested in understanding how they operate (Xu and Ouyang 2022). Of course, the use of robots in education is not new, but what matters in the scope of this paper is to establish to what extent, and for what reasons, they have been used in education about AI.

Literature review

Thanks to technological development and cheaper hardware, robots have extended their field of application beyond technical university levels to general education. This has led to the emergence of Educational Robotics (ER) as a field in its own right, pursuing the goal of developing robots and associated resources to serve as educational tools at different grades. The characteristics of ER, such as the application of digital technologies to solve real problems, its suitability for STEM (Science, Technology, Engineering, Mathematics) education and Project Based Learning (PBL) methodologies, and the existence of many student competitions, make this field well suited to fostering 21st century skills (Ananiadou and Claro 2009), especially the learning skills known as the “4Cs”: Critical thinking, Communication, Collaboration, and Creativity (Kivunja 2015).

An extensive review of the ER literature highlights some remarkable initiatives and platforms (Bezerra Junior et al. 2018; Anwar et al. 2019; Darmawansah et al. 2023). In kindergarten and the first levels of primary school, the robots used are very simple, usually with a friendly animal-like form that is familiar to children and favours HRI, such as the Bee robot (Castro et al. 2018; Seckel et al. 2023) or Blue-bot (Aranda et al. 2019). For the upper levels of primary school and the lower levels of secondary school, the KIBO Bot (Sullivan and Bers 2019) and the MBot robot (Sáez–López et al. 2019) are two representative platforms, while the LEGO MINDSTORMS kit (LMkit) is the most widely used robotic tool worldwide. Moving to high school and university levels, we find the iRobot Create (Gomoll et al. 2017), the NAO (Konijn and Hoorn 2020), the TurtleBot (Amsters and Slaets 2020; Weiss et al. 2023), the Khepera IV (Farias et al. 2019), and various robotic arms.

None of the previous robots were designed for teaching AI, so they lack basic features required to deal with the five ideas discussed above (Naya–Varela et al. 2023). Such a robot should: (1) include sensors that allow natural interaction with humans and the environment, which means a camera, microphone, and tactile sensing; (2) support a wide range of actuations, such as locomotion, manipulation, speech production (speaker) or visual communication (LCD screens); (3) support the execution of complex algorithms, such as those related to computer vision, reasoning, or machine learning; (4) be equipped with a wide spectrum of communication technologies, such as WiFi, Bluetooth, 5G or similar, and support internet connection; (5) support different programming languages adapted to the educational levels, which allow external AI libraries or functionalities to be integrated; (6) have a simulation model, so students can work in a controlled and simple fashion before moving to the real robot, which also reduces the number of physical platforms schools need and the required investment; and (7) include adapted teaching materials covering the five main AI topics, which teachers can use directly.

Turning to ER aimed at AI education (Chen et al. 2022; Chu et al. 2022), which should include the previous features, two main platforms stand out. The Thymio (Fig. 1, left) is a robotic platform that began as a tool to teach robotics to children between the ages of 6 and 12 (Mondada et al. 2017) and, over time, has also become applicable at university, even being used in research articles by professors and graduate students (Heinerman et al. 2015). It is a two-wheeled mobile robot without a camera, designed to be compatible with LEGO bricks. New functionalities have been added over time, such as a Block Based Programming Language (BBPL) to control the robot (Shin et al. 2014), wireless communication to operate the robot in real time (Rétornaz et al. 2013), and a tangible programming language for the youngest students (Mussati et al. 2019). In addition, it offers a wide range of didactic materials, classified by language and age range, for all educational levels but mainly focused on K12 (Thymio robot 2024).

Image of Thymio (left) and Fable (right) educational robots.

Fable (Fig. 1, right) is a modular robot targeted at teaching robotics to children from 6 years of age (Pacheco et al. 2013, 2015). Its modules can be joined together to form different types of robots. It supports a Learning by Doing methodology, in which students select the morphology that best suits the challenge at hand. It can be programmed using BBPL, Python or Java. Its website also offers teaching content grouped by concepts into several lessons (Pacheco 2024a). Finally, this initiative provides the “STEAM Lab”, a set of combined tools for fully integrated STEM teaching, such as Fable robot modules, virtual reality glasses, and 3D printers (Pacheco 2024b).

These two ER initiatives cover most of the features listed above, but not all, as will be discussed in section “Analysis and discussion”. In conclusion, although the ER field has been very active in recent years across a wide spectrum of educational levels, none of the existing platforms supports a clear roadmap towards AI literacy that can help teachers and educators from the AIEd community in a feasible fashion.

Methodology

Research contribution

This paper aims to fill the gap identified in the previous section by proposing a technological didactic tool, called Robobo, developed within the scope of the Robobo Project (The Robobo Project 2024), to teach AI topics at different educational levels, from K12 to higher education, in line with the latest recommendations on AI literacy. The tool is grounded in the idea of using robots to learn about AI: because they represent the concept of an intelligent agent so well, they can be used to teach all the core topics of this discipline. The Robobo robot provides all the hardware and software features needed to support such a goal, as well as a range of materials that can be adapted to different educational levels and skills, leading to a long-term educational proposal.

To simplify the scope of this paper, the application of AI techniques to robots will be called Intelligent Robotics here, and the following sections are devoted to presenting and analysing the Robobo Project within this scope.

Theoretical basis

The intelligent agent perspective of AI

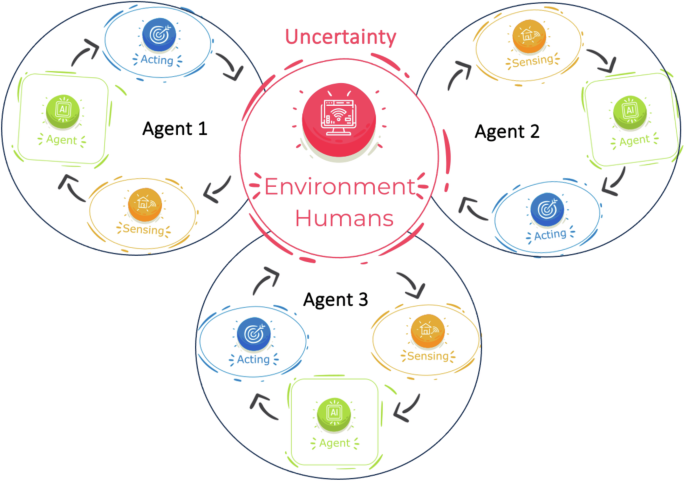

The intelligent agent perspective of AI is typically illustrated through a cycle like the one shown in Fig. 2 (green, blue, pink, and orange blocks) (Russell and Norvig 2021). It is based on four main elements: the environment, real or simulated, where the agent “lives” and interacts with humans; the sensing stage, where it perceives information from the environment; the acting stage, where it executes actions on the environment; and the agent itself, which encompasses the internal elements and processes that allow it to reach goals autonomously. In a more general setup, the agent can be situated in an environment with other intelligent agents or even people, and it can communicate with them, so a multiagent system arises (as displayed in Fig. 2 with three agent cycles). In this scope, robots are naturally perceived as intelligent agents, embodied in real or simulated bodies and situated in real or simulated environments (Murphy 2019). In addition, multirobot systems can also be easily presented to students.
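To make the cycle concrete, the following minimal Python sketch (an illustration, not part of the Robobo software) runs a single agent in a toy one-dimensional environment. The `LineWorld` class, the `decide` policy, and the step limit are hypothetical; the point is only that each iteration passes through the sensing, decision, and acting stages of Fig. 2.

```python
from dataclasses import dataclass


@dataclass
class LineWorld:
    """Hypothetical toy environment: the agent moves along positions 0..size-1."""
    size: int
    agent_pos: int
    goal_pos: int

    def sense(self) -> int:
        # Sensing stage: signed offset from the agent to its goal.
        return self.goal_pos - self.agent_pos

    def act(self, step: int) -> None:
        # Acting stage: move the agent, clamped to the world boundaries.
        self.agent_pos = max(0, min(self.size - 1, self.agent_pos + step))


def decide(offset: int) -> int:
    """The agent itself: a simple reactive policy that steps toward the goal."""
    if offset > 0:
        return 1
    if offset < 0:
        return -1
    return 0


def run_cycle(env: LineWorld, max_steps: int = 100) -> int:
    """Repeat sense -> decide -> act until the goal is reached; return the steps used."""
    for step in range(max_steps):
        offset = env.sense()
        if offset == 0:
            return step
        env.act(decide(offset))
    return max_steps


if __name__ == "__main__":
    world = LineWorld(size=10, agent_pos=2, goal_pos=7)
    print(run_cycle(world))  # -> 5
```

Replacing `decide` with a learned or search-based policy changes only the "agent" block of the cycle, which is why the same loop structure can host the representation, reasoning, and learning topics discussed below.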

The multiagent system interaction cycle.

Taking this scheme as the background for the AI perspective we aim to teach students in the Robobo Project, the following fundamental topics have been considered: (1) Sensing, (2) Acting, (3) Representation, (4) Reasoning, (5) Learning, (6) Collective AI and (7) Ethical and legal aspects. Most of these topics come from the AI4K12 initiative (AI4K12 2023), but some modifications have been made to adapt them to ER, and a new one has been included: collective AI.

Sensing the environment

Artificial agents obtain data from their sensors and from direct communication channels (see Section “Collective AI”). For the first case, students must learn the difference between sensing (information provided by the sensor) and perception (information in context), and how the properties of real environments introduce uncertainty into perception. This is a key concept for real AI systems, as it imposes one of the classical limits of symbolic approaches (section “Introduction”). In the realm of AI literacy, the fundamentals of cameras, microphones, distance sensors and tactile screens must be covered.
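The gap between sensing and perception can be illustrated with a short Python sketch. It assumes a hypothetical distance sensor that returns readings in centimetres, one of which is a spurious spike; the readings and the threshold are invented for illustration. Relying on the latest raw value, or even on the mean, leads to a wrong conclusion here, whereas a median filter yields a more robust perception of the obstacle.

```python
from statistics import mean, median

# Hypothetical raw distance readings in cm (sensing); the last one is a spurious spike.
READINGS = [12.1, 11.8, 12.4, 11.9, 55.0]
OBSTACLE_THRESHOLD_CM = 20.0  # hypothetical "obstacle is close" threshold


def obstacle_close(value_cm: float, threshold_cm: float = OBSTACLE_THRESHOLD_CM) -> bool:
    """Perception: interpret a distance value in the context of the task."""
    return value_cm < threshold_cm


def perceive(readings: list[float]) -> dict[str, bool]:
    """Compare three ways of turning raw readings into a perception."""
    if not readings:
        raise ValueError("at least one reading is required")
    return {
        "latest": obstacle_close(readings[-1]),      # trusts a single noisy value
        "mean": obstacle_close(mean(readings)),      # pulled upward by the outlier
        "median": obstacle_close(median(readings)),  # robust to a single outlier
    }


if __name__ == "__main__":
    print(perceive(READINGS))  # -> {'latest': False, 'mean': False, 'median': True}
```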

Agent actions

Students must be aware of the main types of actuators and effectors that AI systems may have, such as motors, speakers, or screens, and how they are found in smartphones, autonomous cars, or video games. The intelligent agent internally selects the actions to apply in order to fulfil its tasks through a series of complex processes explained later. Consequently, it is necessary to understand the types of actions that can be executed and their effects on the environment.

Inside the agent itself, many processes are executed, but following the five ideas, we could reduce them to three: representation, reasoning, and learning.

Knowledge representation

Students must learn how digital data is stored in the computational system that constitutes the core of the agent, and what that data represents. This is a new topic for most students outside the computer science field. It encompasses one of the main issues in AI, how to represent knowledge, and the distinction between symbolic and subsymbolic approaches. The topic covers the representation of images, sounds, speech, and other structures such as graphs or trees that are core aspects of AI.
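As a minimal illustration of the symbolic/subsymbolic distinction, the Python sketch below stores a tiny camera image as a grid of grey levels (a subsymbolic representation) and derives a symbolic fact from it. The image, the darkness threshold, and the fact format `("dark_object", side)` are hypothetical choices made for this example.

```python
# Subsymbolic representation: a 4x6 grayscale image (0 = black, 255 = white).
IMAGE = [
    [255, 255, 255, 255, 255, 255],
    [20, 30, 255, 255, 255, 255],
    [25, 15, 255, 255, 255, 255],
    [255, 255, 255, 255, 255, 255],
]


def dark_fraction(image, first_col, last_col, dark_below=100):
    """Fraction of pixels darker than `dark_below` in columns [first_col, last_col)."""
    pixels = [row[c] for row in image for c in range(first_col, last_col)]
    return sum(p < dark_below for p in pixels) / len(pixels)


def to_symbols(image, min_fraction=0.2):
    """Symbolic representation: a set of facts such as ("dark_object", "left")."""
    half = len(image[0]) // 2
    facts = set()
    if dark_fraction(image, 0, half) >= min_fraction:
        facts.add(("dark_object", "left"))
    if dark_fraction(image, half, len(image[0])) >= min_fraction:
        facts.add(("dark_object", "right"))
    return facts


if __name__ == "__main__":
    print(to_symbols(IMAGE))  # -> {('dark_object', 'left')}
```

The pixel grid keeps every detail but says nothing explicit, while the symbolic facts are compact and easy to reason with but discard information; students can experiment with the thresholds to see what is lost.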

Reasoning and decision making

Reasoning comprises all the methods involved in action selection and decision making. It is related to computational thinking and problem solving, although it includes specific algorithms and approaches to achieve an autonomous response. Given the objective the agent must achieve (the task to solve), it must reason about how to fulfil it autonomously using the available sensory information and the established representation. Function optimization, graph search, probabilistic reasoning, and rule-based reasoning are examples of topics to be learned in this scope.
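Graph search, one of the reasoning topics just listed, can be shown with a short breadth-first search in Python. The sketch assumes a hypothetical occupancy grid (0 = free cell, 1 = obstacle) over which a robot plans the shortest 4-connected path; it is a generic textbook algorithm, not a description of the Robobo software.

```python
from collections import deque

# Hypothetical occupancy grid: 0 = free cell, 1 = obstacle.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]


def bfs_path(grid, start, goal):
    """Return the shortest 4-connected path from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}  # also serves as the visited set
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and grid[nxt[0]][nxt[1]] == 0 and nxt not in parents):
                parents[nxt] = cell
                frontier.append(nxt)
    return None


if __name__ == "__main__":
    print(bfs_path(GRID, (0, 0), (2, 0)))
    # -> [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

Because breadth-first search expands cells in order of distance from the start, the first path that reaches the goal is a shortest one in number of moves.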

Machine learning

This is the most popular topic in AIEd, as machine learning (ML) algorithms have evolved and improved notably in recent decades. They allow intelligent agents to create models from data, which can be used for decision making and prediction. Students must understand the three main types of methods in this realm: supervised, unsupervised and reinforcement learning. These methods must be approached in a general way, because new algorithms are constantly arising and it is not possible to cover them all. Thus, AI literacy should focus on general processes such as data capture, data preparation, algorithm selection and parameterization, training, testing, and results analysis.
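The general process described above (data capture, preparation, training, testing, and analysis) can be followed end to end in a few lines of Python. This sketch uses a nearest-centroid classifier, chosen only because it is simple enough to read in full; the colour samples are invented stand-ins for features a robot's camera might capture, and none of the names belong to a real library.

```python
import random
from math import dist

# 1. Data capture (hypothetical): mean (red, green) values of detected objects, with labels.
SAMPLES = [
    ((200, 40), "red"), ((220, 30), "red"), ((190, 50), "red"), ((210, 45), "red"),
    ((40, 200), "green"), ((30, 210), "green"), ((50, 190), "green"), ((45, 220), "green"),
]


def prepare(samples):
    """2. Data preparation: scale 0..255 channel values to the range 0..1."""
    return [(tuple(v / 255 for v in x), y) for x, y in samples]


def split(samples, test_fraction=0.25, seed=0):
    """Shuffle reproducibly and hold out a test set."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def train(train_set):
    """3. Training: compute one centroid (mean feature vector) per label."""
    groups = {}
    for x, y in train_set:
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(col) / len(xs) for col in zip(*xs)) for y, xs in groups.items()}


def predict(model, x):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: dist(model[label], x))


def accuracy(model, test_set):
    """4-5. Testing and results analysis: fraction of correct predictions."""
    return sum(predict(model, x) == y for x, y in test_set) / len(test_set)


if __name__ == "__main__":
    train_set, test_set = split(prepare(SAMPLES))
    model = train(train_set)
    print(f"test accuracy: {accuracy(model, test_set):.2f}")  # -> test accuracy: 1.00
```

With real camera data the classes would overlap and accuracy would drop, which is precisely where the results-analysis step becomes interesting for students.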

ER is especially suitable for teaching reinforcement learning because its algorithms are designed to operate in agent-based systems that interact with the environment in a continuous cycle of state, action, and reward. This is a very relevant property of ER, because it is not easy to find practical application cases with which to teach reinforcement learning, especially at lower educational levels.
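The state, action, and reward cycle can be made concrete with tabular Q-learning, a standard reinforcement learning algorithm. The Python sketch below uses a hypothetical corridor task (five cells, reward 1 for reaching the last one), not a Robobo activity, and the learning rate, discount factor, exploration rate, and episode count are illustrative values.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal (terminal)
ACTIONS = (-1, 1)   # move left or move right


def env_step(state, action):
    """Environment: apply the action and return (next_state, reward, done)."""
    next_state = max(0, min(N_STATES - 1, state + action))
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q, state, rng):
    """Best-valued action in `state`, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy selection balances exploration and exploitation.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = env_step(state, action)
            # Q-learning update: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)).
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


def learned_policy(q):
    """Greedy action in each non-terminal state after training."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(learned_policy(q_learning()))  # -> [1, 1, 1, 1]
```

On a mobile robot, the corridor cells would be replaced by perceived states (for example, discretized sensor readings) and the reward by a task signal, but the update rule stays the same.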

Collective AI

The general perspective of AI systems illustrated in Fig. 2 implies that individual agents are situated in an environment where they interact with other agents and people. It is important for students to understand such an AI ecosystem because, in the future, we will live surrounded by intelligent agents (smart houses, buildings, or cities) and interact naturally with them, so AI literacy must address the fundamentals of these global systems, such as communication modalities, cloud computing, cybersecurity, interface design, or biometric data.

Ethical and legal aspects

The ethical and legal aspects of AI are still under study and development, but it is important to foster in students a critical view of AI technologies, supported by their own knowledge of AI principles, so they can form an independent and solid opinion about the autonomous decision making of these systems. Many questions arise about which activities should be addressed with AI or restricted, the benefits and drawbacks of the commercial use of this technology, and the central role that humans should play in this scope (Huang et al. 2023).

All the previous topics are relevant in the scope of AI, with enough impact to be considered independent research areas. Depending on the educational level, they must be covered with more or less technical detail, so the bibliography teachers use will differ. A general recommendation is to follow classical AI textbooks, such as Russell and Norvig (2021) or Poole and Mackworth (2010), as the starting point to learn the fundamentals of these seven topics at all levels. Textbooks adapted to pre-university levels are still under development, whereas specialized ones for the highest levels are very common.

To sum up, the Robobo Project frames AI literacy around the previous seven topics. Their particular contents have been adapted to the different educational levels, as will be explained later.

Methods and materials

To reach the research goal established in Section “Research contribution”, and considering the theoretical frame explained in Section “Theoretical basis”, the research methodology applied has been a combination of educational and engineering methods. The materials developed in this work were created in the scope of the Robobo Project, a long-term educational and technological initiative, composed of three main elements: (1) a mobile robot, (2) a set of libraries and programming frameworks, and (3) specific documentation plus tutorials to support teachers and students in AIEd.

To develop the first element, mainly hardware, a classical engineering cycle of conceptualization, design, prototyping, testing, and refining was applied during the first year of the project in 2016 (Bellas et al. 2017). Validation was carried out in controlled sessions with students until the hardware functioned reliably. To develop the software components, the Unified Process for software development was applied. This methodology proposes an iterative and incremental scheme, in which the different iterations focus on relevant aspects of the Robobo software. Each iteration carried out the classic phases of analysis, design, implementation, and testing, so that at its end the weaknesses and performance issues to be addressed in the next iteration were identified. Each iteration incorporated more functionalities than the previous one, until the last iteration ended with the Robobo software implementing all the functionalities (Esquivel–Barboza et al. 2020). The software was mainly developed between 2016 and 2019, although improvements have continued to be incorporated up to the present day. Finally, for the educational materials, a typical educational design research methodology was followed. Specific teaching units and classroom activities were created and evaluated by studying the learning outcomes they provided to students, as well as their acceptance by teachers, in an iterative cycle of testing and improvement in real sessions carried out in formal and informal education from 2017 to 2023. To this end, quantitative and qualitative analyses were performed using surveys and direct teacher feedback, leading to a set of materials that have been validated over several cycles (Bellas et al. 2022).

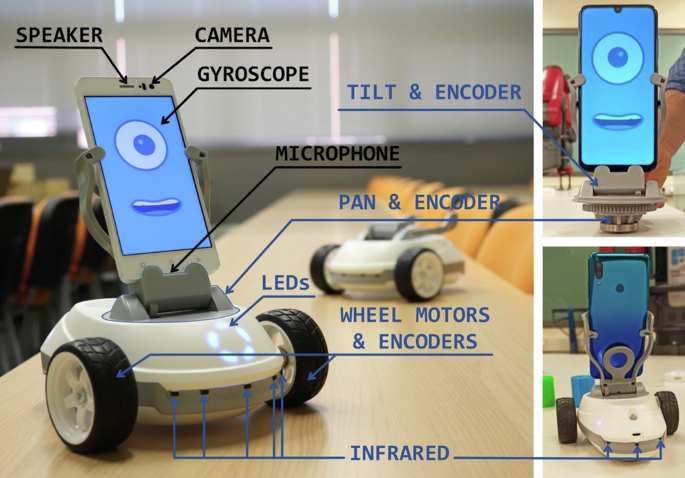

Turning now to the main materials used in this work, it must be pointed out that the key element of the Robobo Project is the smartphone used in the real platform, which provides most of the features established in Section “Theoretical basis” to support AIEd. The robotic base has a holder on its top (Fig. 3) to attach a standard smartphone. Communication between the smartphone and the robotic base is handled via Bluetooth. Students can use their own smartphones, which reduces the investment cost for schools and promotes a positive use of this type of device at school, as recommended by UNESCO (Global Education Monitoring Report Team 2023) Footnote 1.

Black typeface represents those of the smartphone and blue ones those of the platform. Left: General view of the front of Robobo. Top right: Detailed view of the pan-tilt unit. Bottom right: Representation of the back of Robobo, with LEDs and infrared sensors.

Robobo: the real robot

The electronic and mechanical design of the mobile physical platform is detailed in Bellas et al. (2017). The core concept behind the Robobo robot is to combine the simple sensors and actuators of the platform with the technologically advanced sensors, actuators, computational power, and connectivity provided by the smartphone. From a functional perspective, the components of the Robobo hardware (displayed in Fig. 3) can be classified into five categories: sensing, actuation, control, communications, and body.

Robobo sensors

On the base:

Infrared (IR): It has 8 IR sensors, 5 in the front and 3 in the back, used for distance detection, as well as for avoiding collisions or falls from high surfaces.

Encoders: They provide the angular position of the motor shaft. They can be used for odometry, to correct the trajectory of the movement, or simply to check whether the robot is actually moving.

Battery level: It indicates the robot’s remaining autonomy, which is useful for long-term experiments or for challenges related to energy consumption.

On the smartphone:

The characteristics of the smartphone’s sensors change depending on the model, but these are the most common:

Camera: Perhaps the most relevant Robobo sensor for teaching AIEd, due to the number of applications it supports, such as colour detection, object identification, face detection, among others.

Microphone: It can detect specific sounds, ambient noise and, of course, the user’s voice for speech recognition.

Tactile screen: This sensor detects different types of touches, such as taps or flings, which users typically perform during natural HRI.

Illumination: This sensor provides the ambient light level, which is useful in different applications to adapt the robot’s response accordingly, or for energy saving purposes.

Gyroscope: It allows the orientation of Robobo in space to be identified. It can be used for navigation, map tracking, and detecting inclinations and changes in slope.

Accelerometer: It measures Robobo’s acceleration, identifying the robot’s real movement even when its own motors are not performing any actuation.

GPS: It provides the global position of the robot using this popular technology, although it only works outdoors.
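As an example of how the wheel encoders listed above support odometry, the Python sketch below applies the standard differential-drive dead-reckoning equations to one pair of encoder readings. The wheel radius, encoder resolution, and wheel separation are hypothetical parameters, not Robobo specifications; in practice, the smartphone's gyroscope could be used to correct the heading drift that accumulates with this method.

```python
from math import cos, pi, sin


def update_pose(x, y, theta, ticks_left, ticks_right,
                ticks_per_rev, wheel_radius, wheel_base):
    """Dead-reckoning pose update for a differential-drive robot.

    x, y, wheel_radius and wheel_base share one length unit; theta is in radians.
    All robot parameters are hypothetical, not Robobo specifications.
    """
    # Distance travelled by each wheel from its encoder tick count.
    dist_left = 2 * pi * wheel_radius * ticks_left / ticks_per_rev
    dist_right = 2 * pi * wheel_radius * ticks_right / ticks_per_rev
    # Displacement of the robot centre and change of heading.
    d_center = (dist_left + dist_right) / 2
    d_theta = (dist_right - dist_left) / wheel_base
    # Midpoint approximation: move along the average heading of the interval.
    x += d_center * cos(theta + d_theta / 2)
    y += d_center * sin(theta + d_theta / 2)
    return x, y, theta + d_theta


if __name__ == "__main__":
    # One full revolution of both wheels: straight motion along x.
    print(update_pose(0.0, 0.0, 0.0, 360, 360, 360, 0.03, 0.1))
    # Opposite quarter turns of the wheels: rotation in place.
    print(update_pose(0.0, 0.0, 0.0, -90, 90, 360, 0.03, 0.1))
```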

Robobo actuators

On the base:

Motors: Perhaps the most relevant Robobo actuators. Two motors drive the wheels for navigation. Two other motors are in the pan-tilt unit, enabling horizontal and vertical rotation of the smartphone, which gives Robobo a wider range of possible movements. Students normally perceive these as “head” movements, which add personality to the robot’s expressions.

LEDs: They are utilized to transmit simple information to the user in a visual way. For instance, a warning condition when the battery is low, or a different colour depending on the distance to the walls.

On the smartphone:

The characteristics of the smartphone actuation change with the model, but again, we can find some common ones:

Speaker: It can be used to play sounds or produce speech, which is fundamental in natural interaction.

Torch: It is an adjustable light which is useful in many cases, for instance, to increase the illumination of a scenario to improve the camera response.

LCD screen: It is very useful for displaying visual information. Usually, the screen shows Robobo’s “face”, which can be changed to display different emotions.

Robobo control unit

Robobo’s control unit is the smartphone. It runs all the processes related to receiving information from the base and sending commands to the actuators through Bluetooth. In addition, it receives commands from, and sends information to, an external computer, as detailed in Section “Software and development tools”. Finally, it runs some algorithms onboard, related to image and sound processing. The computational power of smartphone models varies widely, but, as tested with students, most existing models have processors more capable than most educational challenges require.

Robobo communication system

Current smartphones are equipped with WiFi, 5G and Bluetooth connections. WiFi is the most relevant in the educational scope, as it allows the robot to connect to the internet through the school’s network, which is very common nowadays. As a consequence, students can use information from internet sources in their programs, such as weather forecasts, news, or music, and they can also carry out direct communication by sending and receiving messages or emails.

Robobo body extensions

Finally, it must be highlighted that the Robobo base has a series of holes in its lower part to attach different types of 3D printed accessories (Fig. 4 ). Only the holes are provided to serve as structural support for the accessories, leaving the design completely free to the users, opening the possibility of multiple solutions to the challenges proposed to students while learning AIEd concepts under a STEM methodology.

Top middle: Example of 3D printed accessories. Top right and bottom: Different applications that can be performed with Robobo and the accessories, such as pushing, drawing, or even developing an outdoor version with bigger wheels.

Software and development tools

The Robobo software includes an entire ecosystem of applications, developer/user libraries, and simulators that allow easy adaptation to different learning objectives (Esquivel–Barboza et al. 2020 ). The software has been designed following a modular architecture that facilitates the addition of new capabilities in the future, as well as the configuration of which of these capabilities are available in a particular learning context. Therefore, it provides the technological foundations and functionalities that make Robobo an adaptable learning tool for different levels which is also in continuous evolution.

The core software runs on the smartphone and provides all the intrinsic sensing, actuation, and control capabilities of the robot. It also provides standard programming interfaces for local or remote access to these capabilities. Because the core of the robot’s software runs on a regular smartphone, the robot’s hardware (the smartphone) can be upgraded almost without limit, making the robot a long-term investment for educational institutions.

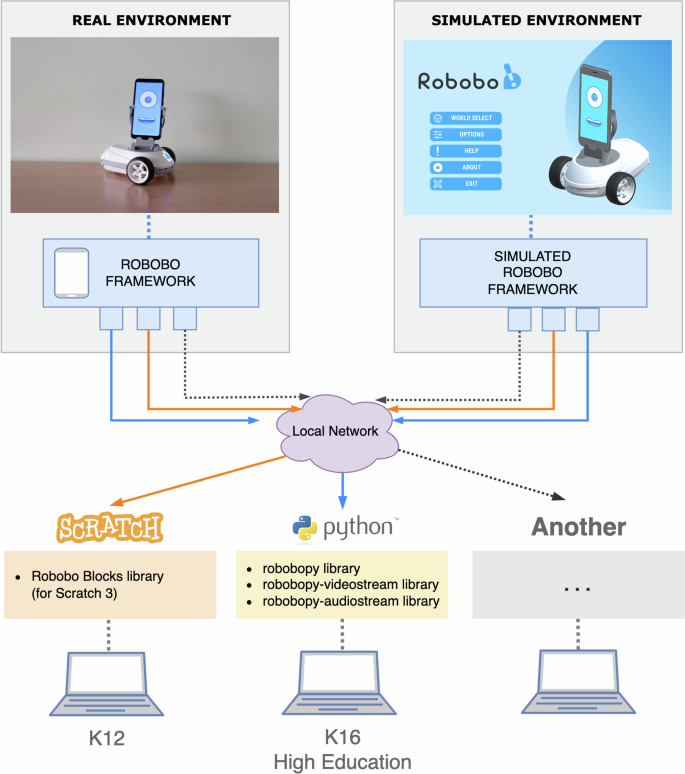

Figure 5 shows the software architecture from the perspective of a user of the Robobo platform. In this context, a user is a student who uses the robot to solve AI tasks by means of programming. However, teachers can also be considered users, whose focus is on designing tasks for the students (including the necessary teaching units and physical or simulated environments). As can be seen in the figure, depending on the educational level, a student can use different software libraries and programming languages to develop the challenges with Robobo. To work with the real robot, the programming computer must be connected to the same local network as the robot (no cables are required). At any time, students can choose to run their program in the simulated environment or on the real robot. Moreover, the robot is fully functional where no Internet connection is available (a restricted local network), since even functionalities such as voice recognition have an implementation suitable for offline operation. Finally, the problems to be solved usually involve a single robot interacting with its environment, but several robots collaborating to solve a common task are also supported.

Representation of the software architecture of the Robobo platform from the user’s point of view.

What makes Robobo a suitable platform for long-term AIEd education is the set of functionalities it provides and how they have been adapted to different skills and educational levels, together with the teaching units designed to exploit them. A high level of semantic and conceptual homogeneity is maintained throughout in order to facilitate a progressive learning experience. Thus, at K12 levels, we propose a block-based programming model, supported by Scratch, with a limited set of available functionalities that nevertheless allows experimenting with a multitude of AI topics. At K16 and higher education levels, the Python programming language is proposed, along with a growing set of sensing, actuation and control functionalities. The use of Python is justified by the fact that it is currently the language of preference for data scientists and artificial intelligence developers (TIOBE Index 2024) and by the abundance of freely available AI libraries and tools that can be used together with the Robobo software.

Table 1 shows a simplified view of the main functionalities provided by the Robobo software platform, classified into three categories: perception (including self-perception and sensing of the environment), actuation and control. As can be seen, even at the lowest level, the robotic platform provides a range of capabilities that are not usually available in other robots commonly used for educational purposes. Thus, from an early learning stage, students can explore fundamental AI concepts by creating adaptive behaviours supported by the variety of ways to sense the environment (through visual, acoustic, or tactile inputs), while interacting and even expressing internal emotional states (using speech, sounds or facial expressions).

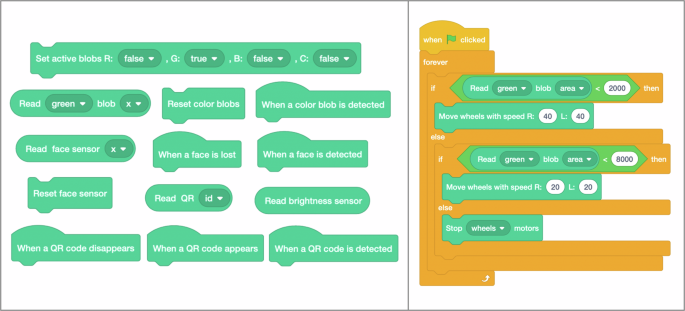

An extensive set of new blocks has been defined in the Scratch 3 environment, allowing students to work with all available Robobo functionalities Footnote 1. Fig. 6 displays a subset of programming blocks that provide access to some of the sensing capabilities, as well as a simple program that sets the speed of the robot in response to its environment (namely, depending on the distance at which a green object is detected by the camera).
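
The logic of the right-hand program in Fig. 6, mapping how far away a green object is to a wheel speed, can be sketched in Python as follows. This is not a transcription of the figure: it assumes the camera reports the apparent size of the detected blob (a larger blob meaning a closer object), and the thresholds and speed values are illustrative.

```python
from typing import Optional


def speed_for_green_blob(blob_size: Optional[float]) -> int:
    """Choose a wheel speed from the apparent size of a green blob.

    blob_size is None when no green object is visible; otherwise a larger
    value means the object is closer. Thresholds and speeds are illustrative.
    """
    if blob_size is None:
        return 0   # nothing seen: stay still
    if blob_size < 500:
        return 30  # far away: drive quickly towards it
    if blob_size < 2000:
        return 10  # getting close: slow down
    return 0       # very close: stop
```

Using apparent size as a distance proxy avoids any camera calibration, which keeps the idea within reach of students at the block-programming stage.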

Left: a subset of the new programming blocks we have designed for Robobo. Right: a simple program that uses some of these new blocks together with the generic Scratch blocks.

Regarding the Python language, Robobo offers advanced capabilities that allow teachers to devise an almost unlimited number of tasks to solve in different scenarios Footnote 2. For example, they can propose challenges in the scope of computer vision, autonomous driving, or natural interaction, which can be tackled in a simple fashion. Furthermore, students can always combine these intrinsic capabilities of Robobo with other AI technologies and tools that are common in the field and available through external libraries.

To briefly exemplify programming with the Python interface, a code fragment is included in Fig. 7. First, the classes that provide access to the desired functionalities must be imported. Likewise, any external library commonly used in AI, such as TensorFlow, OpenCV, or Scikit-learn, can be imported if desired. Then, remote communication with the Robobo robot (in a real or simulated environment) is established. From this point, the behaviour of the robot can be programmed using the functions available in our Robobo.py library. Note that switching the execution of the program between the real robot and the simulator only requires modifying the line of code that establishes communication with the robot (Fig. 7).
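
Since the Fig. 7 listing is not reproduced in this text, the sketch below mimics only its structure with stand-in classes: the controller is written once against a small interface, and only the line that creates the connection would change between targets. `RobotLink`, `SimulatedRobot` and their methods are hypothetical; the actual Robobo.py class and method names are documented in the Robobo Wiki.

```python
from typing import Protocol


class RobotLink(Protocol):
    """Minimal interface a controller needs (hypothetical, for illustration)."""

    def read_front_distance(self) -> float: ...

    def set_wheels(self, left: int, right: int) -> None: ...


class SimulatedRobot:
    """Toy stand-in for a simulated robot facing an obstacle straight ahead."""

    def __init__(self, start_distance: float = 100.0):
        self.distance = start_distance
        self.wheels = (0, 0)

    def read_front_distance(self) -> float:
        return self.distance

    def set_wheels(self, left: int, right: int) -> None:
        self.wheels = (left, right)
        # Driving forward closes the gap by the mean wheel speed (arbitrary units).
        self.distance = max(0.0, self.distance - (left + right) / 2)


def controller(robot: RobotLink, steps: int) -> None:
    """Drive forward until the front distance drops below 20, then stop."""
    for _ in range(steps):
        if robot.read_front_distance() < 20:
            robot.set_wheels(0, 0)
        else:
            robot.set_wheels(10, 10)


# Only this line would change to target another backend exposing the same methods.
robot = SimulatedRobot()
controller(robot, steps=20)
```

Writing the controller against an interface rather than a concrete class is what makes the real/simulated switch a one-line change.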

Simple Python code illustrating how to program a controller for Robobo that can be executed either on a real robot or on a robot in a simulated environment.

As shown in Fig. 5, a dedicated simulator, called RoboboSim, was developed to suit the needs of schools and students Footnote 3. It was built on top of the Unity engine and designed to be easy to use, requiring minimal training. Students and teachers only need to download the application, choose one of the available virtual environments (worlds) and start a remote connection with the simulated robot, in the same way as with a real robot. The user interface requires minimal configuration and follows a video-game-like design intended to be user friendly for young students. Students can solve different challenges in the available worlds using Scratch 3 or Python, since both are supported by RoboboSim.

RoboboSim can be configured with two levels of realism. (1) The standard level includes basic physical modelling of the robot (weight, friction, motors). The real robot's response was empirically characterized, and different models were created. Therefore, at this realism level, RoboboSim is reasonably faithful to the real robot, and programs developed at this level do not require much adjustment to run on the real platform. (2) The simplified realism level does not perform physical simulation, and the response is deterministic. It is recommended for students who are starting with robotic simulations, or for those who prefer to focus on programming concepts, as it makes the proposed challenges easier to accomplish.
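
The contrast between the two realism levels can be pictured with a one-dimensional toy model of forward motion. This is not RoboboSim's internal model: the motor time constant, friction factor and noise level below are invented solely to contrast a deterministic update with a physically flavoured, noisy one.

```python
import random


def step_simplified(position, command_speed, dt):
    """Simplified level: the robot moves exactly at the commanded speed."""
    return position + command_speed * dt


def step_physical(position, velocity, command_speed, dt, rng,
                  time_constant=0.3, friction=0.9, noise_std=0.5):
    """Toy 'standard' level: motor lag, friction loss and random noise.

    Returns the new (position, velocity). All constants are illustrative.
    """
    # First-order lag: velocity moves towards the commanded speed over time.
    velocity += (command_speed - velocity) * min(1.0, dt / time_constant)
    # Friction scales the effective speed down; noise makes each run differ.
    effective = velocity * friction + rng.gauss(0.0, noise_std)
    return position + effective * dt, velocity


def run(steps=50, dt=0.1, speed=10.0, seed=0):
    """Drive both models with the same command and return their final positions."""
    rng = random.Random(seed)
    simple, physical, velocity = 0.0, 0.0, 0.0
    for _ in range(steps):
        simple = step_simplified(simple, speed, dt)
        physical, velocity = step_physical(physical, velocity, speed, dt, rng)
    return simple, physical
```

In the toy model the physical variant falls short of the commanded distance, which mirrors why programs tuned at the simplified level may need adjustment on the real robot.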

A key property of RoboboSim in the realm of autonomous robotics is its optional random mode of operation. In this mode, particular objects in the simulation appear in slightly different positions each time the environment is restarted. The purpose of this property is to force students to develop more robust programs, leading to autonomous behaviours that can adapt to changes in the environment.
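
The random mode can be pictured as jittering the nominal position of selected objects at every reset. The object names, coordinates, jitter range and seeding below are assumptions for illustration; RoboboSim's actual behaviour and configuration are described in its own documentation.

```python
import random

# Nominal (x, y) positions in metres for objects that may move (hypothetical world).
NOMINAL_OBJECTS = {"red_cylinder": (1.0, 0.5), "green_box": (-0.5, 1.2)}


def reset_world(nominal, max_offset=0.1, rng=None):
    """Return object positions, each shifted by up to max_offset on each axis."""
    if rng is None:
        rng = random.Random()
    return {
        name: (x + rng.uniform(-max_offset, max_offset),
               y + rng.uniform(-max_offset, max_offset))
        for name, (x, y) in nominal.items()
    }


positions = reset_world(NOMINAL_OBJECTS, rng=random.Random(42))
```

A controller that hard-codes the cylinder at `(1.0, 0.5)` will miss it after most resets, which pushes students towards sensor-driven behaviours instead.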

Educational resources

Two main types of educational resources have been developed and tested in recent years in the scope of the Robobo Project. First, Teaching Units (TU), which include specific activities, guides, and solutions to learn about different AI topics. Second, documentation, which allows students and teachers to use the robot more independently, adapting it to their particular needs and exploring new possibilities.

Teaching units and lessons

As for the TUs, the Robobo Project has mainly been applied to formal education. Consequently, these materials have been designed to be aligned with the latest AI literacies introduced in Section "Introduction" and to include the AI topics explained in Section "Theoretical basis", although they can be used as independent lessons in informal education, such as extracurricular activities, workshops, specific AI courses or summer courses. The main target of these units has been teachers, who are the main actors in the real introduction of AI in education.

For secondary school and high school (from 14 to 18 years old), 7 TUs based on Robobo have been developed in the scope of the AI+ educational project (Bellas et al. 2022), specifically focused on intelligent robotics. In higher education, more specialized teaching units have been developed for Vocational Education and Training (VET) through the AIM@VET project (Renda et al. 2024), and others for the different courses on intelligent robotics at the University of A Coruña (Llamas et al. 2020). Table 2 includes a set of representative TUs, to provide an overall view of the type of activity that can be implemented in classes with this robot. They are roughly ordered by increasing complexity, although each could be adapted to increase or decrease it.

Documentation and tutorials

The Robobo Project includes comprehensive documentation adapted to different educational levels, including manuals, reference guides and programming examples. Table 3 describes these support materials and provides the links to them.

All the documentation is open access and available at the Robobo Wiki Footnote 4. New users should start by downloading and configuring RoboboSim. It is recommended to try the first programs using the Scratch framework, for instance by trying the available sample projects Footnote 5. A next step would be to try them on the real robot, which requires following the documentation about the Android app and the initial configuration of the platform. Finally, students at higher levels should review the Python documentation. It includes a complete library reference, as well as specific documents for the most advanced features, such as object recognition, lane detection, or video streaming.

Teaching methodology

The methodology used in all the TUs of the Robobo Project is based on Project Based Learning (PBL), as it best fits the STEM approach in which educational robotics has shown clear advantages. Each TU presents a challenge that students must solve with the robot, in teams or individually, depending on the specific learning objectives and the teacher's criteria. The challenge addresses a real problem, which must be solved in a real or simulated environment, as established by the teacher depending on the learning focus (working with the real robot means spending more time on technical issues). To apply the PBL methodology in the Robobo TUs, students carry out five typical phases of an engineering project:

Problem analysis and requirement capture: understanding the problem to solve and its relevance.

Organization and planning: dividing the whole problem into subproblems.

Solution design: programming the solution.

Solution validation: testing the solution on the robot.

Presentation of results and documentation: showing the final response, submitting the solution, and answering any questions from the teacher.

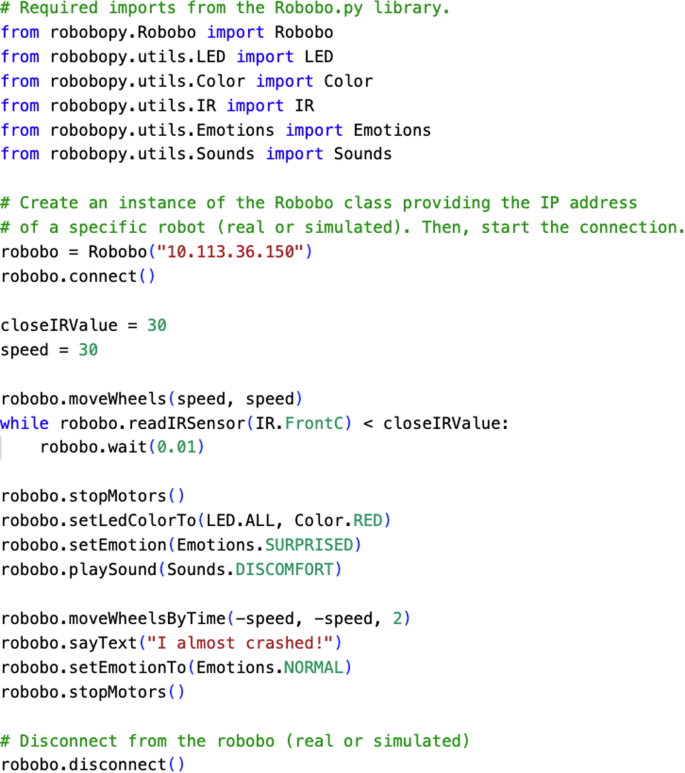

The teacher's role in each of these steps is very relevant, as they must monitor the students' progress and answer their questions, which can be open-ended since this type of project allows different valid solutions. The teacher must also evaluate each student's final solution, which can be based on the robot's performance, on the code, on progress and attitude, or on a specific exam or test. In terms of the specific background on AI topics, teachers should have prior training according to the educational level, which is out of the scope of the Robobo Project. To support secondary school teachers in this new discipline, the TUs developed in the realm of the AI+ project make up a teacher guide, with a recommendation about the theoretical contents to be taught, a possible organization of each TU into activities and tasks, and all the code solutions to the challenge. As an example, Fig. 8 displays the organization of a TU focused on natural interaction with Robobo that is included in the teacher guide. It shows how the final goal is divided into activities and tasks, including an initial stage for theoretical contents.

An example of TU organization that is provided to teachers in a project based on Robobo.

Experimental results. Robobo use cases with students

The Robobo Project has been validated in several training activities since 2017 in different European countries, from secondary school to master's degree, both in informal (workshops) and formal education (official curricula). As commented above, in secondary school and high school, it has been used in the format of short sessions in the realm of the AI+ project. At University level, in Spain, it has been applied in the Industrial Engineering degree and master at the University of A Coruña from the 2019/20 academic year onwards, in general subjects such as "Informatics" and in more specific ones such as "Intelligent Robotics" or "Mobile Robotics". In the Netherlands, the VU University Amsterdam has also been using Robobo in the "Learning Machines" course of the Faculty of Sciences (Miras 2024) since 2018/19. Apart from official subjects, it has been applied in more than ten final degree and master projects at the University of Coruña (Spain) from 2018 to 2022, focused on advanced features such as computer vision using Deep Learning (Esquivel–Barboza et al. 2020) or multirobot coordination using machine learning (Llamas et al. 2020).

The following three sections describe the results obtained in specific use cases for (1) secondary school, (2) initial level of higher education, and (3) master education level. In the three use cases, the validation instruments were the same: online questionnaires filled in by students at the completion of the task or lesson. Through this validation stage, two research questions are addressed:

RQ1: Is the Robobo Project a suitable tool for learning the fundamentals of AI in the long-term?

To know if the tool is perceived as another educational robot for learning about technology or as a more formal and powerful resource.

To know if the project is perceived as an educational framework that could be used in several courses throughout the academic life.

RQ2: Is the methodological approach and the materials adequate to learn intelligent robotics?

To know if the teaching resources are adequate to each education level.

To know if the use of the student's smartphone for teaching purposes could be problematic.

To know if the teaching approach based on simulated/real robot is well perceived by students.

Regarding the general profile of the students who participated in this study, at all three levels of education we had students from different countries, mainly from Europe and Latin America (Fig. 9). This introduced more variability in the students' background in programming, digital education, or AI topics. Furthermore, Table 4 shows the ratio between female and male students along with their age ranges. As can be observed, there is a majority of male students, in line with the usual statistics in the fields of computer science and engineering within these geographic areas.

Students’ country of origin.

Secondary school

This use case summarizes the overall impression of teachers and students about Robobo in the scope of the AI+ project (AI+ 2019). The goal was to train students in the seven fundamental topics of AI:

Perception and actuation in AI, corresponding to TU7, TU8, and TU10 of the AI+ curriculum.

Natural interaction, corresponding to TU9 of the AI+ curriculum.

Reinforcement learning, corresponding to TU13 of the AI+ curriculum.

Representation (computer vision), corresponding to TU12 of the AI+ curriculum.

Reasoning (path planning), corresponding to TU14 of the AI+ curriculum.

Collective AI (multiagent system), corresponding to TU17 of the AI+ curriculum.

Social Impact (AGI and cognitive robotics), corresponding to TU15 of the AI+ curriculum.

The specific questionnaires related to RQ1 and RQ2 were filled in during the final training activity of the AI+ project, held in Slovenia in May 2022, in which 30 students and 12 teachers participated. Starting with RQ1, representative results are displayed in the two graphs of Fig. 10. The left one was answered only by teachers, and it shows how they rated the different topics covered in the AI+ curriculum according to their relevance for the students' training. All these topics were covered with the TUs mentioned above.

Teachers (left) and secondary school students’ (right) answers to sub questions of RQ1.

As can be observed, machine learning and the social impact of AI are rated highest, followed by perception and actuation, which rank above representation and reasoning. These results reinforce both the idea that even teachers who are not experts in AI topics easily understand the intelligent agent approach to AI developed throughout the TUs, and the current proposal of using robotics as a key application field to learn about AI. On the other hand, the right graph of Fig. 10 displays the results of a multiple-choice questionnaire, answered only by students, which shows their perspective on the robot's capabilities for learning about intelligent robotics. Most students highlight the possibility of using computer vision and the support for human-robot interaction (HRI), two completely new features for them when the project started.

Regarding RQ2, two questions were posed to teachers in the final activity (Fig. 11). Figure 11 left is related to the use of Python in secondary school. The results show that teachers have an overall positive opinion of it, considering it adequate for learning AI. Regarding its complexity, opinions are divided: some teachers consider that Python could be integrated within the AI curriculum, while others consider that it should be learned separately due to its complexity. However, none of them consider Python too complex to be addressed in secondary and high school. Figure 11 right summarizes their opinion regarding the use of the smartphone in Robobo. All participants express a favourable point of view, though some believe that dedicated devices are preferable to the students' own smartphones. This preference stems not only from potential technical complications arising from using various smartphones but also from ethical considerations related to performance disparities between inexpensive and costly devices when carrying out tasks.

Teachers’ answers to sub questions of RQ2.

University (degree level)

This second use case corresponds to a specific activity proposed to students in the "Informatics" subject of the 1st year of the Industrial Engineering degree at UDC during the 2022/23 course. This subject is focused on learning the fundamentals of programming, using the Python language, over a period of four months. Traditionally, students had to solve different programming exercises focused on the algorithmic and structural aspects of the language, avoiding the problems derived from more advanced and realistic applications. However, it has been shown that such a methodology decreases students' interest and motivation for the topic, mainly in degrees other than computer science, where students are more interested in practical issues (Fontenla–Romero et al. 2022).

Specifically, the following activity was proposed within this subject: To develop a Python program so that Robobo can grab a red cylinder in a closed environment independently of its initial position. The activity was carried out asynchronously: teachers proposed it and students had two weeks to solve it autonomously (homework). During this period, they were tutored on demand, but encouraged to use the Robobo documentation and solve issues on their own. None of the students had previous experience with AI or robotics, and the main goal of the activity was to evaluate their programming skills by developing a feasible and usable solution. Consequently, they had to learn about sensors (distance, colour) and actuators (motors and speech) to create an autonomous response on the robot. At the end of the activity, they also had to give a short presentation of their solution to the teachers. The total number of participants in the activity was 56, aged 18–20, who answered the survey after the deadline.
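
One way students might structure this task is as a small state machine: search by turning until the camera sees red, approach while keeping the red blob centred, and stop once it is close. The inputs (blob x-position in [-1, 1] and apparent size), the thresholds and the `(left, right)` speed tuples are hypothetical and are not the Robobo.py API; the actual grabbing would rely on an attached accessory and is outside this sketch.

```python
from typing import Optional, Tuple

SEARCH, APPROACH, GRAB = "search", "approach", "grab"


def step(state: str, blob_x: Optional[float],
         blob_size: float) -> Tuple[str, Tuple[int, int]]:
    """Return (next_state, (left_speed, right_speed)) for one control cycle.

    blob_x is the horizontal position of the red blob in [-1, 1] (None if not
    seen); blob_size grows as the robot gets closer. Values are illustrative.
    """
    if state == GRAB:
        return GRAB, (0, 0)       # task finished: stay still
    if blob_x is None:
        return SEARCH, (10, -10)  # spin in place until red is seen
    if blob_size > 0.3:
        return GRAB, (0, 0)       # close enough: stop in front of the cylinder
    # Steer towards the blob: a blob to the right speeds up the left wheel.
    turn = int(10 * blob_x)
    return APPROACH, (15 + turn, 15 - turn)
```

Because the decision depends only on what the camera currently sees, the same program works from any starting position, which is the requirement stated in the activity.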

Two representative questions related to RQ1 are displayed in Fig. 12. The left one shows that students perceive solving the proposed problem as close to their work as engineers (only 14.5% of answers are 1 or 2), despite the childlike appearance of the robot, which could seem inadequate for this age. The right question reinforces the original claim about this practical methodology and the focus on validation aspects of a program when learning the fundamentals of programming (64.3% of students' answers are 1 or 2, meaning disagreement with the use of traditional challenges).

1 means totally disagree while 5 means totally agree.

Fig. 13 shows students' answers to two questions related to RQ2. The left one is very important for this research question, as it addresses a typical problem of educational robotics: students put too much effort into solving practical robotics issues and lose sight of the goal of learning programming. In this case, the Robobo Python library and its integration with RoboboSim seem to be adequate for students according to their answers (only 12.7% negative feedback). It must be pointed out that the "level of realism" of the simulator was set to the minimum, to simplify programming. Regarding the right question of Fig. 13, it clearly shows that most students consider the library documentation adequate and helpful for programming the activity (78.2%).

First year university students’ answers to sub questions of the RQ2.

University (master level)

As in the previous section, these results correspond to specific subjects at master level in the 2022/23 course. In particular, five students from the "Industrial Robotics" course of the 4th year of the Industrial Technologies Engineering degree at UDC, and 28 students from the "Intelligent Robotics and Autonomous Systems" course of the master's degree in Industrial Informatics and Robotics at UDC participated in this use case. The students belonged to two different groups, but their background skills in programming and mathematics were similar.

In both courses, students learned the fundamentals of intelligent robotics, mainly covering perception, actuation, representation, and reasoning. In these subjects, the proposed challenge is focused on the architectural aspects of intelligent robotics, that is, how to organize the control system to achieve the desired autonomy in a feasible and modular fashion. The differences between reactive, deliberative and hybrid approaches are covered in detail.

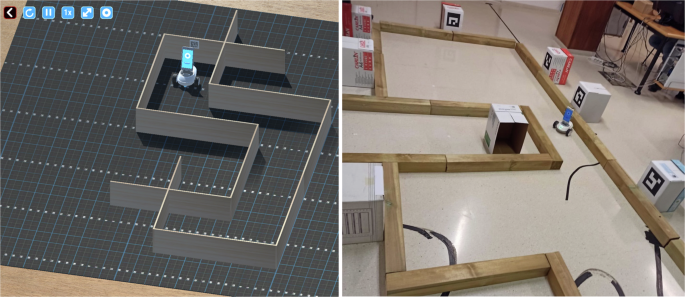

The students were organized in pairs, and they had a period of 8 weeks to solve an intelligent robotics challenge with the following goals:

To define a practical goal for Robobo in one of the environments of RoboboSim. This also implies defining the set of environmental rules that condition the robot's behaviour. For example, students can specify that blue objects "burn" and Robobo should avoid them, or that Robobo cannot touch walls or collide with objects.

To implement and test a reactive or hybrid architecture with at least four autonomous behaviours. The architecture must allow Robobo to act in the proposed environment to fulfil its objectives independently of its initial position or orientation, and even if the positions of some objects change.
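
A reactive architecture with several behaviours is often organised as fixed-priority arbitration, in the spirit of subsumption: each behaviour either proposes a command or abstains, and the highest-priority proposal wins. The four behaviours and the sensor dictionary below are hypothetical examples loosely echoing the environmental rules mentioned above (avoid "burning" blue objects, do not touch walls); the sensor keys and thresholds are assumptions.

```python
from typing import Callable, Dict, List, Optional, Tuple

Command = Tuple[int, int]  # (left_speed, right_speed)
Behaviour = Callable[[Dict[str, float]], Optional[Command]]


def avoid_wall(s: Dict[str, float]) -> Optional[Command]:
    # Highest priority: back away if the front infrared reading is high.
    return (-10, -10) if s["front_ir"] > 0.8 else None


def avoid_blue(s: Dict[str, float]) -> Optional[Command]:
    # Blue objects "burn": turn away when one is visible.
    return (10, -10) if s["blue_visible"] else None


def go_to_goal(s: Dict[str, float]) -> Optional[Command]:
    # Head for the goal when it is in view.
    return (15, 15) if s["goal_visible"] else None


def wander(s: Dict[str, float]) -> Optional[Command]:
    # Default behaviour: always proposes a slow forward motion.
    return (8, 8)


def arbitrate(behaviours: List[Behaviour], sensors: Dict[str, float]) -> Command:
    """Return the command of the first (highest-priority) behaviour that fires."""
    for behaviour in behaviours:
        command = behaviour(sensors)
        if command is not None:
            return command
    return (0, 0)


PRIORITIES = [avoid_wall, avoid_blue, go_to_goal, wander]
```

A hybrid architecture would add a deliberative layer on top, for example a planner that changes which goal `go_to_goal` pursues, while this reactive loop keeps handling immediate safety.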

Developing the proposed architecture implied: (1) Sensing: using infrared sensors, encoders and the gyroscope for navigation and localization (odometry), as well as computer vision for guidance; (2) Actuation: using the wheel and pan-tilt unit motors, implementing specific control programs for each behaviour with a PID or similar approach; (3) Representation: developing an appropriate internal representation of the state space, which could include a map; and (4) Reasoning: the main focus was on the design and implementation of the decision architecture, to select the most adequate action in each case. Students could program independent behaviours "by hand" or use a machine learning approach (such as reinforcement learning).
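
For the actuation item, a textbook discrete PID controller is sketched below, applied to a hypothetical heading-correction task in which the error is the difference between a target heading and the gyroscope reading. The gains, the turn-rate limit and the toy plant (turn rate equal to the controller output) are illustrative and not tuned for Robobo.

```python
class PID:
    """Discrete PID controller: u = Kp*e + Ki*sum(e*dt) + Kd*de/dt."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_heading(target=90.0, steps=600, dt=0.05):
    """Toy plant: turn rate (deg/s) equals the controller output, clipped to +/-90."""
    pid = PID(kp=3.0, ki=0.5, kd=0.1)
    heading = 0.0
    for _ in range(steps):
        u = pid.update(target - heading, dt)
        turn_rate = max(-90.0, min(90.0, u))  # wheels cannot turn arbitrarily fast
        heading += turn_rate * dt
    return heading
```

With these values the heading overshoots during the first seconds (the integral accumulates while the turn rate is saturated) and then settles close to the target, a behaviour students can observe and then reduce with anti-windup or retuning.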

The final assessment was based on showing the correct response of the robot in simulation and, as extra value, on the real robot. Validation on the real robot was performed by all students, although the time available for adjustment was not enough to obtain reliable responses. Figure 14 contains an example of a scenario selected and solved by students, both in simulation (left) and in the real world (right). In this case, the goal was for the robot to autonomously create a map of the environment, using the tags to locate itself, with the aim of later performing operations optimally.
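
Between sightings of landmarks such as the tags, a robot's pose is commonly propagated by dead reckoning, combining wheel-encoder distances with the gyroscope heading, as listed in the sensing item of the architecture. The sketch below uses this simple differential-drive update; the readings in the example are made-up numbers, and no Robobo API is involved.

```python
import math


def odometry_step(x, y, distance_left, distance_right, heading_deg):
    """Advance (x, y) by the mean wheel travel along the gyroscope heading.

    distance_left/right come from the wheel encoders (same unit as x and y);
    heading_deg is the absolute heading reported by the gyroscope.
    """
    d = (distance_left + distance_right) / 2.0
    theta = math.radians(heading_deg)
    return x + d * math.cos(theta), y + d * math.sin(theta)


def integrate(readings, start=(0.0, 0.0)):
    """Integrate (left, right, heading_deg) readings into a list of positions."""
    x, y = start
    path = [(x, y)]
    for left, right, heading in readings:
        x, y = odometry_step(x, y, left, right, heading)
        path.append((x, y))
    return path


# Drive 10 units east, then turn to 90 degrees and drive 10 units north.
path = integrate([(10, 10, 0), (10, 10, 90)])
```

Errors accumulate with every step of such an estimate, so absolute references like the tags in Fig. 14 are useful to correct it.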

Example of problem solved by master level students in simulation and real robot.

In this case, the answers provided by students are shown in Figs. 15 and 16, corresponding to representative topics for RQ1 and RQ2. The left question in Fig. 15 shows that, despite the robot's simple appearance, students clearly perceive it as an adequate tool for learning about intelligent robotics in a technical scope. Figure 15 right reinforces a key idea for specialized students, who must understand that AI requires not only complex sensors such as cameras or microphones, but also basic ones such as distance or orientation sensors.

Master students’ answers to sub questions of RQ1. DK/DA means Don’t Know or Don’t Answer.

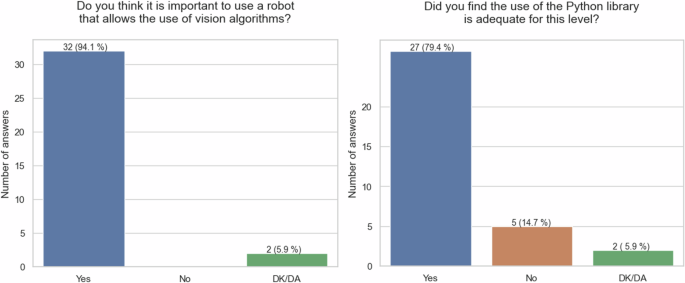

Master students’ answers to sub questions of RQ2. DK/DA means Don’t Know or Don’t Answer.

In terms of RQ2, the left graph of Fig. 16 shows that students valued as important the use of a robot that allowed them to apply computer vision algorithms. This is very relevant for RQ2, as these students may require low-level libraries and resources to gain finer control of the robot. In this respect, Python provides a natural advantage, as it allows external libraries to be included for advanced processing, such as computer vision, but also path planning, machine learning, or speech recognition. Regarding the documentation, the answers to the right question in Fig. 16 show that students found it easy to use and adequate, even at this high educational level.
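
As an example of the kind of low-level vision processing advanced students might write themselves or delegate to a library such as OpenCV, the sketch below thresholds an RGB image for "red" pixels and returns their centroid and count. It is pure Python on nested lists so it runs without dependencies; the colour thresholds are arbitrary, and a practical pipeline would usually convert to HSV and use library routines instead.

```python
def red_blob(image, min_red=150, max_other=100):
    """Return ((cx, cy), count) of 'red' pixels, or (None, 0) if none are found.

    image is a list of rows, each row a list of (r, g, b) tuples in 0-255.
    A pixel counts as red if r >= min_red and both g and b are <= max_other.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            if r >= min_red and g <= max_other and b <= max_other:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None, 0
    return (sum_x / count, sum_y / count), count
```

The centroid's horizontal position and the pixel count are exactly the two quantities a steering behaviour needs: where the object is, and roughly how close it is.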

Analysis and discussion

The results obtained during six years of intensive use of the Robobo Project in real classrooms, both directly through the questionnaires filled in by students and teachers and indirectly from what can be extracted from them, allow us to state that the project responds affirmatively to the hypotheses raised in both RQs. Regarding RQ1, we can affirm that Robobo has been shown to be an appropriate tool to support AI literacy in the long term. It was successfully applied at different educational levels to teach core AI concepts of varying difficulty. A major part of this achievement was due to the adapted resources and materials included in the Robobo project, which have been perceived as highly useful by students and teachers, as reflected in their positive answers to RQ2.

In secondary school, the Robobo project has been widely tested in European schools with novice students and teachers, with no previous experience in AI and only a basic background in digital education and programming. In this scope, the usability and simplicity of the project resources, such as RoboboSim, the Scratch blocks, or the documentation, have been shown to be adequate for this initial level. Although most of the students' work at this level was carried out in simulation, the ease of transfer to the real robot was also very engaging and motivating for students, who could try their solutions in the real world. Especially interesting is the positive feedback obtained from teachers regarding the teaching units and the suitability of the robot to support the learning of AI topics. This is a very relevant result for the project team, as simplifying the advanced AI tools and methods commonly used in the field could easily result in materials that are either too simplistic or too complex for students without a technical background.

At university level, students have also responded positively to the use of the Robobo Project as a tool to learn intelligent robotics in a more technical scope. The possibility of using Python is one of the main reasons behind this success. First, the robobo.py library was positively valued by students due to the functionalities it provides, from high-level perception functions to direct streams of sensor information. Second, Python's compatibility with third-party AI libraries allowed advanced students to easily integrate state-of-the-art algorithms and methods in their programs. In terms of simulation, RoboboSim was not perceived as too basic for technical development, as students could use the random mode in the worlds and enable the higher realism level to make the problem more challenging. In addition, these students saw the simulator as just an initial step towards developing the solution of the task on the real robot, which was the main goal at this educational level. University students also considered the documentation helpful to support their autonomous work and learning.

After this period of six years and all the educational experiences carried out using the Robobo robot for AI education, it is important to conclude this discussion by comparing it with its two main competitors in this scope: the Thymio and the Fable robots. Table 5 summarizes the 7 key features established in Section "Literature review" for an educational robot to support formal AI literacy. As can be observed in the table, and as has been shown throughout the paper, the Robobo robot meets all of them, as they were part of its design specifications. The Thymio robot is limited in terms of advanced sensing (mainly a camera), advanced actuation (an LCD screen) and computational power, which makes it inadequate for learning about some key AI topics such as computer vision, natural interaction, or reinforcement learning. However, this platform is a great option for some topics, mainly in terms of price/quality ratio, but it must be complemented by another type of robot to cover AI education in a global frame. The Fable lacks specific simulation software, which is an important drawback for class organization, rate of progress, and investment. The rest of the features are supported, so it can be used for proper AI learning in real setups.

But the main advantage of Robobo for long-term education on AI, compared to the other two robots, lies in its existing teaching resources, point 7 in the table. Its resources are fully aligned with the official recommendations for AI literacy explained in this paper, which is not the case for the competitors. As a consequence, teachers can start using the robot in their classes in the short term with confidence. On the one hand, they have open access to reliable materials that can be applied directly; on the other, the potential of the available resources gives them a wide range of possibilities to develop new teaching units and materials.

In this regard, it must be highlighted that all Robobo Project users provided similar feedback about the future possibilities of the robot, indicating that they perceive themselves to be using only a small part of them. This is very relevant for the project, as it reinforces its core idea: combining a simple mobile base with a smartphone, which is the element that is updated and supports new developments and improvements. Therefore, the future applications of the Robobo Project remain open, which is very important in the realm of AIEd.

Conclusions

The main contribution of this work has been the presentation of a reliable classroom tool to support AI literacy teaching at different educational levels. Policy makers are currently designing plans and curricula to introduce AI training in formal education. Meanwhile, researchers in the field of AIEd must carry out projects like the one shown here, in which specific tools for AI learning are tested in real classes with teachers and students. This allows useful conclusions to be drawn for the whole educational community, and particularly for those policy makers. The social impact of this type of contribution is highly relevant, as piloting resources with students is a must in education.

The Robobo Project is based on the application of educational robots as an optimal classroom resource for learning about the core AI topics under the intelligent agent approach. This is the approach followed by the most relevant AIEd initiatives regarding AI literacy principles. Throughout this paper, it has been shown that a robot with adequate technical capabilities, together with software tools adapted to the educational level and formal teaching units, can be successfully applied for long-term AI training in real courses. Teachers can use the robot in complete AI courses covering the five basic AI topics, or just for particular topics integrated into subjects like mathematics, informatics, or physics. The suitability of robots for the STEM approach is reinforced by their adaptation to AI literacy teaching, as AI also fits perfectly with STEM principles, which increases its applicability in many educational environments.

Finally, it must be clarified that, from the experience gained in the Robobo Project, we conclude that not all types of educational robots are appropriate for AIEd. That is, simply developing challenges in the realm of autonomous robotics is not enough for students to learn about AI. Robots in this context must support specific AI features, such as computer vision, speech recognition and production, tactile interaction, visual interfaces, high computational power, and an internet connection. In addition, we also conclude that it is fundamental to develop materials that support teachers, as AI is a new field for most of them, and having quality resources is the only way to ensure proper progress in AIEd.

Data availability

The data obtained in the students’ and teachers’ surveys are available at https://github.com/GII/robobo-education-paper/tree/main/Surveys. The teaching units developed in the Erasmus+ projects are available at https://aiplus.udc.es/results/ and https://aim4vet.udc.es/modules/. The teaching units belonging to university degrees can be accessed at https://github.com/GII/robobo-education-paper/tree/main.

Code availability

All the Robobo libraries and source code can be downloaded from https://github.com/mintforpeople/robobo-programming/wiki and https://github.com/mintforpeople/robobo.py.

https://github.com/mintforpeople/robobo-programming/wiki/Blocks .

https://github.com/mintforpeople/robobo-programming/wiki/python-doc .

https://github.com/mintforpeople/robobo-programming/wiki/Unity .

https://github.com/mintforpeople/robobo-programming/wiki .

http://education.theroboboproject.com/en/scratch3/sample-projects .

AI at code.org (2023) Anyone can learn Computer Science. https://code.org/ai . Accessed 11 Oct 2024

AI+ (2019) AI+ Project Results. https://aiplus.udc.es/results/ . Accessed 11 Oct 2024

AI4K12 (2023) The Artificial Intelligence for K-12 initiative. https://ai4k12.org/ . Accessed 11 Oct 2024

Amsters R, Slaets P (2020) Turtlebot 3 as a Robotics Education Platform. In: Merdan M, Lepuschitz W, Koppensteiner G, et al. (eds) Robotics in Education. Springer International Publishing, Cham, pp 170–181

Ananiadou K, Claro M (2009) 21st Century Skills and Competences for New Millennium Learners in OECD Countries. https://doi.org/10.1787/218525261154

Anwar S, Bascou NA, Menekse M, Kardgar A (2019) A systematic review of studies on educational robotics. J. Pre-Coll. Eng. Educ. Res. (J.-PEER) 9:2

Google Scholar

Aranda MR, Estrada Roca A, Margalef Martí M (2019) Idoneidad didáctica en educación infantil: matemáticas con robots Blue-Bot. EDMETIC 8:150–168. https://doi.org/10.21071/edmetic.v8i2.11589

Article Google Scholar

Bellas F, Guerreiro-Santalla S, Naya M, Duro RJ (2022) AI Curriculum for European High Schools: An Embedded Intelligence Approach. Int. J. Artif. Intell. Educ. https://doi.org/10.1007/s40593-022-00315-0

Bellas F, Naya M, Varela G, et al. (2017) The Robobo project: bringing educational robotics closer to real-world applications. In: International Conference on Robotics and Education RiE 2017. Springer, pp 226–237

Bezerra Junior JE, Queiroz PGG, de Lima RW (2018) A study of the publications of educational robotics: a systematic review of literature. IEEE Lat. Am. Trans. 16:1193–1199. https://doi.org/10.1109/TLA.2018.8362156

Brew M, Taylor S, Lam R, et al. (2023) Towards developing AI literacy: three student provocations on AI in higher education. Asian J. Distance Educ. 18:1–11

Castro E, Cecchi F, Valente M, et al. (2018) Can educational robotics introduce young children to robotics and how can we measure it. J. Comp. Assist. Learn. 34:970–977. https://doi.org/10.1111/jcal.12304

Chen X, Zou D, Xie H, et al. (2022) Two decades of artificial intelligence in education: contributors, collaborations, research topics, challenges, and future directions. Educ. Technol. Soc. 25:28–47

Chu S-T, Hwang G-J, Tu Y-F (2022) Artificial intelligence-based robots in education: a systematic review of selected SSCI publications. Computers Educ.: Artif. Intell. 3:100091. https://doi.org/10.1016/j.caeai.2022.100091

Darmawansah D, Hwang G-J, Chen M-RA, Liang J-C (2023) Trends and research foci of robotics-based STEM education: a systematic review from diverse angles based on the technology-based learning model. Int. J. STEM Educ. 10:12. https://doi.org/10.1186/s40594-023-00400-3

Dignum V, Penagos M, Pigmans K, Vosloo S (2021) Policy guidance on AI for children. Unicef. https://www.unicef.org/innocenti/reports/policy-guidance-ai-children . Accessed 11 Oct 2024

Esquivel-Barboza EA, Llamas LF, Vázquez P, et al. (2020) Adapting computer vision algorithms to smartphone-based robot for education. In: 2020 Fourth International Conference on Multimedia Computing, Networking and Applications (MCNA). pp 51–56

Farias G, Fabregas E, Peralta E, et al. (2019) Development of an easy-to-use multi-agent platform for teaching mobile robotics. IEEE Access 7:55885–55897. https://doi.org/10.1109/ACCESS.2019.2913916

Fontenla-Romero O, Bellas F, Sánchez-Maroño N, Becerra JA (2022) Learning by Doing in Higher Education: An Experience to Increase Self-learning and Motivation in First Courses. In: Gude Prego JJ, de la Puerta JG, García Bringas P, et al. (eds) 14th International Conference on Computational Intelligence in Security for Information Systems and 12th International Conference on European Transnational Educational (CISIS 2021 and ICEUTE 2021). Springer International Publishing, Cham, pp 336–345

Global Education Monitoring Report Team (2023) United Nations Educational, Scientific and Cultural Organization. https://www.unesco.org/gem-report/en/technology . Accessed 11 Oct 2024

Gomoll AS, Hmelo-Silver CE, Tolar E, et al. (2017) Moving Apart and Coming Together: Discourse, Engagement, and Deep Learning. J. Educ. Technol. Soc. 20:219–232

Heinerman J, Rango M, Eiben AE (2015) Evolution, Individual Learning, and Social Learning in a Swarm of Real Robots. In: 2015 IEEE Symposium Series on Computational Intelligence. pp 1055–1062

Hornberger M, Bewersdorff A, Nerdel C (2023) What do university students know about Artificial Intelligence? Development and validation of an AI literacy test. Computers Educ.: Artif. Intell. 5:100165. https://doi.org/10.1016/j.caeai.2023.100165

Huang C, Zhang Z, Mao B, Yao X (2023) An Overview of Artificial Intelligence Ethics. IEEE Trans. Artif. Intell. 4:799–819. https://doi.org/10.1109/TAI.2022.3194503

ISTE (2023) Artificial Intelligence in Education. https://www.iste.org/areas-of-focus/AI-in-education . Accessed 11 Oct 2024

Kivunja, C. (2015) Exploring the Pedagogical Meaning and Implications of the 4Cs “Super Skills” for the 21st Century through Bruner’s 5E Lenses of Knowledge Construction to Improve Pedagogies of the New Learning Paradigm. Creative Education, 6, 224–239. https://doi.org/10.4236/ce.2015.62021

Konijn EA, Hoorn JF (2020) Robot tutor and pupils’ educational ability: teaching the times tables. Computers Educ. 157:103970. https://doi.org/10.1016/j.compedu.2020.103970

Laupichler MC, Aster A, Schirch J, Raupach T (2022) Artificial intelligence literacy in higher and adult education: a scoping literature review. Computers Educ.: Artif. Intell. 3:100101. https://doi.org/10.1016/j.caeai.2022.100101

Lee I, Ali S, Zhang H, et al. (2021) Developing Middle School Students’ AI Literacy. In: Proceedings of the 52nd ACM Technical Symposium on Computer Science Education. Association for Computing Machinery, New York, NY, USA, pp 191–197

Llamas LF, Paz-Lopez A, Prieto A, et al. (2020) Artificial Intelligence Teaching Through Embedded Systems: A Smartphone-Based Robot Approach. In: Silva MF, Luís Lima J, Reis LP, et al. (eds) Robot 2019: Fourth Iberian Robotics Conference. Springer International Publishing, Cham, pp 515–527

Machine Learning for Kids (2023) Machine Learning Projects. https://machinelearningforkids.co.uk/#!/worksheets . Accessed 11 Oct 2024

Miras K (2024) Learning Machines. https://studiegids.vu.nl/en/courses/2024-2025/XM_0061#/ . Accessed 11 Oct 2024

MIT (2024) Artificial Intelligence with MIT App Inventor. https://appinventor.mit.edu/explore/ai-with-mit-app-inventor . Accessed 11 Oct 2024

Mondada F, Bonani M, Riedo F, et al. (2017) Bringing robotics to formal education: the thymio open-source hardware robot. IEEE Robot. Autom. Mag. 24:77–85. https://doi.org/10.1109/MRA.2016.2636372

Murphy RR (2019) Introduction to AI robotics. MIT press

Mussati A, Giang C, Piatti A, Mondada F (2019) A Tangible Programming Language for the Educational Robot Thymio. In: 2019 10th International Conference on Information, Intelligence, Systems and Applications (IISA). pp 1–4

Naya-Varela M, Guerreiro-Santalla S, Baamonde T, Bellas F (2023) Robobo SmartCity: an autonomous driving model for computational intelligence learning through educational robotics. IEEE Trans. Learn. Technol. 16:543–559. https://doi.org/10.1109/TLT.2023.3244604

Ng DTK, Leung JKL, Chu SKW, Qiao MS (2021) Conceptualizing AI literacy: an exploratory review. Computers Educ.: Artif. Intell. 2:100041. https://doi.org/10.1016/j.caeai.2021.100041

Pacheco M (2024a) Fable: Education Components. https://teacherzone.shaperobotics.com/en-US/home . Accessed 11 Oct 2024

Pacheco M (2024b) Fable: STEAM lab components. https://shaperobotics.com/thinken/ . Accessed 11 Oct 2024

Pacheco M, Fogh R, Lund HH, Christensen DJ (2015) Fable II: Design of a modular robot for creative learning. In: 2015 IEEE International Conference on Robotics and Automation (ICRA). pp 6134–6139

Pacheco M, Moghadam M, Magnússon A, et al. (2013) Fable: Design of a modular robotic playware platform. In: 2013 IEEE International Conference on Robotics and Automation. pp 544–550

Poole DL, Mackworth AK (2010) Artificial Intelligence: foundations of computational agents. Cambridge University Press

Renda C, Prieto A, Bellas F (2024) Teaching Reinforcement Learning Fundamentals in Vocational Education and Training with RoboboSim. In: Marques L, Santos C, Lima JL, et al. (eds) Robot 2023: Sixth Iberian Robotics Conference. Springer Nature Switzerland, Cham, pp 526–538

Rétornaz P, Riedo F, Magnenat S, et al. (2013) Seamless multi-robot programming for the people: ASEBA and the wireless Thymio II robot. In: 2013 IEEE International Conference on Information and Automation (ICIA). pp 337–343

Russell S, Norvig P (2021) Artificial Intelligence: A Modern Approach, 4th US ed

Sáez-López J-M, Sevillano-García M-L, Vazquez-Cano E (2019) The effect of programming on primary school students’ mathematical and scientific understanding: educational use of mBot. Educ. Technol. Res. Dev. 67:1405–1425. https://doi.org/10.1007/s11423-019-09648-5

Seckel MJ, Salinas C, Font V, Sala-Sebastià G (2023) Guidelines to develop computational thinking using the Bee-bot robot from the literature. Educ. Inf. Technol. 28:16127–16151. https://doi.org/10.1007/s10639-023-11843-0

Shin J, Siegwart R, Magnenat S (2014) Visual programming language for Thymio II robot. In: Conference on interaction design and children (idc’14). ETH Zürich

Sullivan A, Bers MU (2019) Investigating the use of robotics to increase girls’ interest in engineering during early elementary school. Int. J. Technol. Des. Educ. 29:1033–1051. https://doi.org/10.1007/s10798-018-9483-y

The Robobo Project (2024) The next generation of educational robots. https://theroboboproject.com/en/ . Accessed 11 Oct 2024

The University of Helsinki (2024) Elements of AI. https://www.elementsofai.com . Accessed 11 Oct 2024

Thymio robot (2024) Helping people to discover and practice digital technologies. https://www.thymio.org/ . Accessed 11 Oct 2024

TIOBE Index (2024) TIOBE Index for June 2024. https://www.tiobe.com/tiobe-index/ . Accessed 11 Oct 2024

UNESCO (2022) K-12 AI curricula: a mapping of government-endorsed AI curricula. https://unesdoc.unesco.org/ark:/48223/pf0000380602 . Accessed 11 Oct 2024

UNESCO (2023) Guidance for generative AI in education and research. https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research . Accessed 11 Oct 2024

Weiss T, Reichhuber S, Tomforde S (2023) From Simulated to Real Environments: Q-Learning for MAZE-Navigation of a TurtleBot. In: Rutkowski L, Scherer R, Korytkowski M, et al. (eds) Artificial Intelligence and Soft Computing. Springer Nature Switzerland, Cham, pp 192–203

Xu W, Ouyang F (2022) The application of AI technologies in STEM education: a systematic review from 2011 to 2021. Int. J. STEM Educ. 9:59. https://doi.org/10.1186/s40594-022-00377-5

Download references

Acknowledgements